A Sneak Peek at SeaORM 2.0

SeaORM 1.0 debuted on 2024-08-04. Over the past year, we've shipped 16 minor releases - staying true to our promise of delivering new features without compromising stability.

While building new features in 1.0, we often found ourselves bending over backwards to avoid breaking changes, which meant leaving in a few bits that aren't exactly elegant or intuitive, or that are, frankly, footguns.

To make SeaORM friendlier and more intuitive for newcomers (and a little kinder to seasoned users too), we've decided it's time for a 2.0 release - one that embraces necessary breaking changes to clean things up and set a stronger foundation for the future.

1.0 New Features

If you haven't been following every update, here's a quick tour of some quality-of-life improvements you can start using right now. Otherwise, you can skip to the 2.0 section.

Nested Select

Nested select is by far the most requested feature, and it is now implemented in SeaORM, along with support for nested aliases and ActiveEnum.

use sea_orm::DerivePartialModel;

#[derive(DerivePartialModel)]
#[sea_orm(entity = "cake::Entity", from_query_result)]
struct CakeWithFruit {
    id: i32,
    name: String,
    #[sea_orm(nested)]
    fruit: Option<Fruit>,
}

#[derive(DerivePartialModel)]
#[sea_orm(entity = "fruit::Entity", from_query_result)]
struct Fruit {
    id: i32,
    name: String,
}

let cakes: Vec<CakeWithFruit> = cake::Entity::find()
    .left_join(fruit::Entity)
    .into_partial_model()
    .all(db)
    .await?;
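To make the idea of nesting concrete, here is a minimal, standard-library-only sketch of how one flat row from a LEFT JOIN could be folded into a nested struct. It is not SeaORM's implementation: JoinedRow and nest are hypothetical names, and the rule that the nested value is None whenever any of its columns is NULL is an assumption made for this example.

```rust
// Hypothetical stand-in for one row of `cake LEFT JOIN fruit`.
// This is a simplified model, not SeaORM's QueryResult.
#[derive(Debug, Clone, PartialEq)]
struct JoinedRow {
    cake_id: i32,
    cake_name: String,
    fruit_id: Option<i32>,      // NULL when the cake has no fruit
    fruit_name: Option<String>, // NULL when the cake has no fruit
}

#[derive(Debug, PartialEq)]
struct Fruit {
    id: i32,
    name: String,
}

#[derive(Debug, PartialEq)]
struct CakeWithFruit {
    id: i32,
    name: String,
    fruit: Option<Fruit>,
}

// Fold the flat row into the nested shape.
// Assumption: the nested model exists only if all its columns are non-NULL.
fn nest(row: JoinedRow) -> CakeWithFruit {
    let fruit = match (row.fruit_id, row.fruit_name) {
        (Some(id), Some(name)) => Some(Fruit { id, name }),
        _ => None,
    };
    CakeWithFruit {
        id: row.cake_id,
        name: row.cake_name,
        fruit,
    }
}
```

A cake without a matching fruit row therefore ends up with fruit set to None, which mirrors the Option<Fruit> field in the derive example above.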

PartialModel -> ActiveModel

DerivePartialModel can now also derive IntoActiveModel. Attributes absent from the partial model are filled with NotSet, so you can use partial models to perform inserts and updates as well.

#[derive(DerivePartialModel)]
#[sea_orm(entity = "cake::Entity", into_active_model)]
struct PartialCake {
    id: i32,
    name: String,
}

let partial_cake = PartialCake {
    id: 12,
    name: "Lemon Drizzle".to_owned(),
};

// this is now possible:
assert_eq!(
    cake::ActiveModel {
        ..partial_cake.into_active_model()
    },
    cake::ActiveModel {
        id: Set(12),
        name: Set("Lemon Drizzle".to_owned()),
        ..Default::default()
    }
);
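For readers new to the Set / NotSet distinction, the conversion can be modelled in plain Rust. The sketch below is a simplified stand-in written for this post, not the code the derive macro generates: ActiveValue here is a two-variant toy enum, CakeActiveModel is hand-written, and the price column is hypothetical, added only to show a field the partial model does not cover.

```rust
// Toy version of an active value: either explicitly set, or left untouched.
#[derive(Debug, Clone, PartialEq)]
enum ActiveValue<T> {
    Set(T),
    NotSet,
}

impl<T> Default for ActiveValue<T> {
    fn default() -> Self {
        ActiveValue::NotSet
    }
}

// Hand-written stand-in for a generated ActiveModel.
// `price` is a hypothetical extra column for illustration.
#[derive(Debug, Clone, PartialEq, Default)]
struct CakeActiveModel {
    id: ActiveValue<i32>,
    name: ActiveValue<String>,
    price: ActiveValue<i64>,
}

struct PartialCake {
    id: i32,
    name: String,
}

impl From<PartialCake> for CakeActiveModel {
    fn from(partial: PartialCake) -> Self {
        CakeActiveModel {
            id: ActiveValue::Set(partial.id),
            name: ActiveValue::Set(partial.name),
            // Every column the partial model does not mention stays NotSet.
            ..Default::default()
        }
    }
}
```

Because the untouched columns stay NotSet, an update built from such a value would only write the columns the partial model actually carries.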

Insert active models with non-uniform column sets

Insert many now accepts active models with different column sets; previously, it would panic on encountering them. Missing columns are filled with NULL, which makes seeding data a seamless operation.

let apple = cake_filling::ActiveModel {
    cake_id: ActiveValue::set(2),
    filling_id: ActiveValue::NotSet,
};

let orange = cake_filling::ActiveModel {
    cake_id: ActiveValue::NotSet,
    filling_id: ActiveValue::set(3),
};

assert_eq!(
    Insert::<cake_filling::ActiveModel>::new()
        .add_many([apple, orange])
        .build(DbBackend::Postgres)
        .to_string(),
    r#"INSERT INTO "cake_filling" ("cake_id", "filling_id") VALUES (2, NULL), (NULL, 3)"#,
);
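The NULL-filling behaviour can be pictured with a small standalone sketch. This is an illustration under stated assumptions rather than SeaORM's query builder: build_insert is a hypothetical helper, rows are modelled as vectors of Option<i64> aligned with the table's column order (None standing in for NotSet), and values are inlined into the string purely for readability where a real builder would bind them as parameters.

```rust
// Hypothetical helper: builds a multi-row INSERT from rows whose set
// columns differ. Each row must have one entry per table column.
fn build_insert(table: &str, columns: &[&str], rows: &[Vec<Option<i64>>]) -> String {
    // Assumption: a column is kept if at least one row sets it.
    let kept: Vec<usize> = (0..columns.len())
        .filter(|&i| rows.iter().any(|row| row[i].is_some()))
        .collect();

    let column_list = kept
        .iter()
        .map(|&i| format!("\"{}\"", columns[i]))
        .collect::<Vec<_>>()
        .join(", ");

    let value_list = rows
        .iter()
        .map(|row| {
            let cells = kept
                .iter()
                .map(|&i| match row[i] {
                    Some(value) => value.to_string(),
                    // A column this row did not set is filled with NULL.
                    None => "NULL".to_owned(),
                })
                .collect::<Vec<_>>()
                .join(", ");
            format!("({})", cells)
        })
        .collect::<Vec<_>>()
        .join(", ");

    format!("INSERT INTO \"{}\" ({}) VALUES {}", table, column_list, value_list)
}
```

Feeding it the apple and orange rows from the example above reproduces the same statement text.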

Support Postgres PgVector & IpNetwork

Available under the feature flags postgres-vector and with-ipnetwork respectively.

#[derive(Clone, Debug, PartialEq, DeriveEntityModel)]
#[sea_orm(table_name = "demo_table")]
pub struct Model {
    #[sea_orm(primary_key)]
    pub id: i32,
    pub embedding: PgVector,
    pub ipaddress: IpNetwork,
}

2.0 New Features

These are small touch‑ups, but added up they can make a big difference.

Nested Select on any Model

#2642 Wait... haven't we seen this before? Not quite. There is a small detail here: every Model can now be used in a nested select! This requires a small breaking change, which is basically to derive PartialModelTrait on regular Models. Also notice that from_query_result is no longer needed.

use sea_orm::DerivePartialModel;

#[derive(DerivePartialModel)]
#[sea_orm(entity = "cake::Entity")] // <- from_query_result not needed
struct CakeWithFruit {
    id: i32,
    name: String,
    #[sea_orm(nested)]
    fruit: Option<fruit::Model>, // <- this is just a regular Model
}

let cakes: Vec<CakeWithFruit> = cake::Entity::find()
    .left_join(fruit::Entity)
    .into_partial_model()
    .all(db)
    .await?;

Wrapper type as primary key

#2643 Wrapper types derived with DeriveValueType can now be used as primary keys. Now you can embrace Rust's type system to make your code more robust!

#[derive(Clone, Debug, PartialEq, Eq, DeriveEntityModel)]
#[sea_orm(table_name = "my_value_type")]
pub struct Model {
    #[sea_orm(primary_key)]
    pub id: MyInteger,
}

#[derive(Clone, Debug, PartialEq, Eq, DeriveValueType)]
pub struct MyInteger(pub i32);
// only for i8 | i16 | i32 | i64 | u8 | u16 | u32 | u64
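Why bother wrapping an integer? The benefit is ordinary Rust newtype safety, which the following standalone sketch tries to show without any SeaORM types. CakeId, BakeryId and CakeStore are hypothetical names invented for this example.

```rust
use std::collections::HashMap;

// Two distinct ID types that both wrap an i32.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct CakeId(pub i32);

#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct BakeryId(pub i32);

// Hypothetical in-memory table keyed by the wrapper type.
struct CakeStore {
    rows: HashMap<CakeId, String>,
}

impl CakeStore {
    // Only a CakeId is accepted here; passing a BakeryId or a bare i32
    // is rejected at compile time.
    fn find_by_id(&self, id: CakeId) -> Option<&str> {
        self.rows.get(&id).map(String::as_str)
    }
}
```

With this shape, a call such as store.find_by_id(BakeryId(1)) does not compile, so mixing up keys from different tables is caught before the code ever runs.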

Multi-part unique keys

#2651 You can now define unique keys that span multiple columns in Entity.

#[derive(Clone, Debug, PartialEq, Eq, DeriveEntityModel)]
#[sea_orm(table_name = "lineitem")]
pub struct Model {
    #[sea_orm(primary_key)]
    pub id: i32,
    #[sea_orm(unique_key = "item")]
    pub order_id: i32,
    #[sea_orm(unique_key = "item")]
    pub cake_id: i32,
}

let stmts = Schema::new(backend).create_index_from_entity(lineitem::Entity);

assert_eq!(
    stmts[0],
    Index::create()
        .name("idx-lineitem-item")
        .table(lineitem::Entity)
        .col(lineitem::Column::OrderId)
        .col(lineitem::Column::CakeId)
        .unique()
        .take()
);

assert_eq!(
    backend.build(&stmts[0]).to_string(),
    r#"CREATE UNIQUE INDEX "idx-lineitem-item" ON "lineitem" ("order_id", "cake_id")"#
);
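The grouping step behind this can be sketched in a few lines of plain Rust: columns that share a unique key name end up in the same index. This is a simplified model, not the Schema helper itself. Column and unique_index_statements are hypothetical names, and the idx-{table}-{key} naming is inferred from the example output above.

```rust
// Hypothetical description of one entity column.
struct Column {
    name: &'static str,
    unique_key: Option<&'static str>,
}

// Emit one CREATE UNIQUE INDEX statement per distinct unique key name.
fn unique_index_statements(table: &str, columns: &[Column]) -> Vec<String> {
    // Collect key names in first-seen order so the output is deterministic.
    let mut keys: Vec<&str> = Vec::new();
    for column in columns {
        if let Some(key) = column.unique_key {
            if !keys.contains(&key) {
                keys.push(key);
            }
        }
    }

    keys.iter()
        .map(|key| {
            // All columns tagged with this key, in declaration order.
            let cols = columns
                .iter()
                .filter(|c| c.unique_key == Some(*key))
                .map(|c| format!("\"{}\"", c.name))
                .collect::<Vec<_>>()
                .join(", ");
            format!(
                "CREATE UNIQUE INDEX \"idx-{}-{}\" ON \"{}\" ({})",
                table, key, table, cols
            )
        })
        .collect()
}
```

Columns without a unique_key, such as id in the entity above, are simply ignored by this step.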

Allow missing fields when using ActiveModel::from_json

#2599 ActiveModel::from_json is now more useful when dealing with input coming from REST APIs.

Consider the following Entity:

#[derive(Clone, Debug, PartialEq, Eq, DeriveEntityModel, Serialize, Deserialize)]
#[sea_orm(table_name = "cake")]
pub struct Model {
    #[sea_orm(primary_key)]
    pub id: i32, // <- not nullable
    pub name: String,
}

Previously, the following would result in the error missing field "id". The usual workaround was to add #[serde(skip_deserializing)] to the Model.

assert!(
    cake::ActiveModel::from_json(json!({
        "name": "Apple Pie",
    }))
    .is_err()
);

Now, the above will just work. The ActiveModel will be partially filled:

assert_eq!(
    cake::ActiveModel::from_json(json!({
        "name": "Apple Pie",
    }))
    .unwrap(),
    cake::ActiveModel {
        id: NotSet,
        name: Set("Apple Pie".to_owned()),
    }
);

How does it work under the hood? It's actually quite interesting. This requires a small breaking change to the trait bound of the method.
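The partially filled result is easy to picture with a toy model. The sketch below does not show how from_json is implemented and makes no claim about the trait bound mentioned above; it only illustrates the observable behaviour. A HashMap of strings stands in for the JSON object, and from_fields, ActiveValue and CakeActiveModel are hypothetical, hand-written stand-ins.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, PartialEq)]
enum ActiveValue<T> {
    Set(T),
    NotSet,
}

#[derive(Debug, PartialEq)]
struct CakeActiveModel {
    id: ActiveValue<i32>,
    name: ActiveValue<String>,
}

// Hypothetical helper: keys present in the input become Set, missing keys
// become NotSet, and a malformed value is still reported as an error.
fn from_fields(fields: &HashMap<&str, &str>) -> Result<CakeActiveModel, String> {
    let id = match fields.get("id") {
        Some(raw) => {
            let parsed = raw
                .parse::<i32>()
                .map_err(|e| format!("invalid id: {}", e))?;
            ActiveValue::Set(parsed)
        }
        None => ActiveValue::NotSet,
    };
    let name = match fields.get("name") {
        Some(raw) => ActiveValue::Set((*raw).to_owned()),
        None => ActiveValue::NotSet,
    };
    Ok(CakeActiveModel { id, name })
}
```

The point of the design is that a missing key is no longer treated the same way as an invalid value: the former yields NotSet, the latter still fails.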

2.0 Exciting New Features

We've planned some exciting new features for SeaORM too.

Ergonomic Raw SQL

Although we explained it in detail in a previous blog post, it's worth repeating that we've integrated the raw_sql! macro nicely into SeaORM. It's like the format! macro but without the risk of SQL injection. It supports nested parameter interpolation, array and tuple expansion, and even repeating groups!

It's not a ground-breaking new feature, as similar functions exist in other dynamic languages. But it does unlock exciting new ways to use SeaORM. After all, SeaORM isn't just an ORM; it's a flexible SQL toolkit you can tailor to your own programming style. Use it as a backend-agnostic SQLx wrapper, SeaQuery with built-in connection management, or a lightweight ORM with enchanted raw SQL. The choice is yours!
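To see why parameter interpolation avoids injection where string formatting does not, consider this small runtime sketch of array expansion. It is not how the macro is implemented (the macro works at compile time); expand_list is a hypothetical helper, and the Postgres-style $1, $2 placeholders are chosen just for the example.

```rust
// Hypothetical helper: replaces one list marker in the SQL template with
// numbered placeholders and returns the values separately, to be bound by
// the database driver instead of being spliced into the SQL text.
// Note: an empty `values` slice would produce `IN ()`, which is not valid
// SQL; a real implementation would have to handle that case.
fn expand_list(template: &str, marker: &str, values: &[&str]) -> (String, Vec<String>) {
    let placeholders = (1..=values.len())
        .map(|i| format!("${}", i))
        .collect::<Vec<_>>()
        .join(", ");
    let sql = template.replace(marker, &placeholders);
    let params = values.iter().map(|v| v.to_string()).collect();
    (sql, params)
}
```

Even if one of the values contains SQL syntax, it only ever travels in the parameter list, so the statement text stays exactly what the programmer wrote.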

Find Model by raw SQL

let item = Item { id: 1 };

let cake: Option<cake::Model> = cake::Entity::find()
    .from_raw_sql(raw_sql!(
        Postgres,
        r#"SELECT "cake"."id", "cake"."name" FROM "cake" WHERE "id" = {item.id}"#
    ))
    .one(&db)
    .await?;

Find custom Model by raw SQL

#[derive(FromQueryResult)]
struct Cake {
    name: String,
    #[sea_orm(nested)]
    bakery: Option<Bakery>,
}

#[derive(FromQueryResult)]
struct Bakery {
    #[sea_orm(alias = "bakery_name")]
    name: String,
}

let cake_ids = [2, 3, 4]; // expanded by the `..` operator

let cake: Option<Cake> = Cake::find_by_statement(raw_sql!(
    Sqlite,
    r#"SELECT "cake"."name", "bakery"."name" AS "bakery_name"
       FROM "cake"
       LEFT JOIN "bakery" ON "cake"."bakery_id" = "bakery"."id"
       WHERE "cake"."id" IN ({..cake_ids})"#
))
.one(db)
.await?;

Paginate raw SQL query

You can paginate a SelectorRaw and fetch Models in batches.

let ids = vec![1, 2, 3, 4];

let mut cake_pages = cake::Entity::find()
    .from_raw_sql(raw_sql!(
        Postgres,
        r#"SELECT "cake"."id", "cake"."name" FROM "cake" WHERE "id" IN ({..ids})"#
    ))
    .paginate(db, 10);

while let Some(cakes) = cake_pages.fetch_and_next().await? {
    // Do something on cakes: Vec<cake::Model>
}
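If the fetch-and-advance loop is unfamiliar, the following synchronous, in-memory sketch shows the contract it relies on: each call hands back the next batch and returns None once the data runs out. It is only a model of the loop shape. The real paginator is async and queries the database page by page, and this Paginator type is a hypothetical stand-in written for the example.

```rust
// Hypothetical in-memory paginator used only to illustrate the loop shape.
struct Paginator<T> {
    items: Vec<T>,
    page_size: usize,
    page: usize,
}

impl<T: Clone> Paginator<T> {
    fn new(items: Vec<T>, page_size: usize) -> Self {
        assert!(page_size > 0, "page size must be positive");
        Paginator { items, page_size, page: 0 }
    }

    // Returns the current page and advances the cursor,
    // or None once all items have been handed out.
    fn fetch_and_next(&mut self) -> Option<Vec<T>> {
        let start = self.page * self.page_size;
        if start >= self.items.len() {
            return None;
        }
        let end = (start + self.page_size).min(self.items.len());
        self.page += 1;
        Some(self.items[start..end].to_vec())
    }
}
```

Note that the last page may be shorter than the page size, so code in the loop body should not assume full batches.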

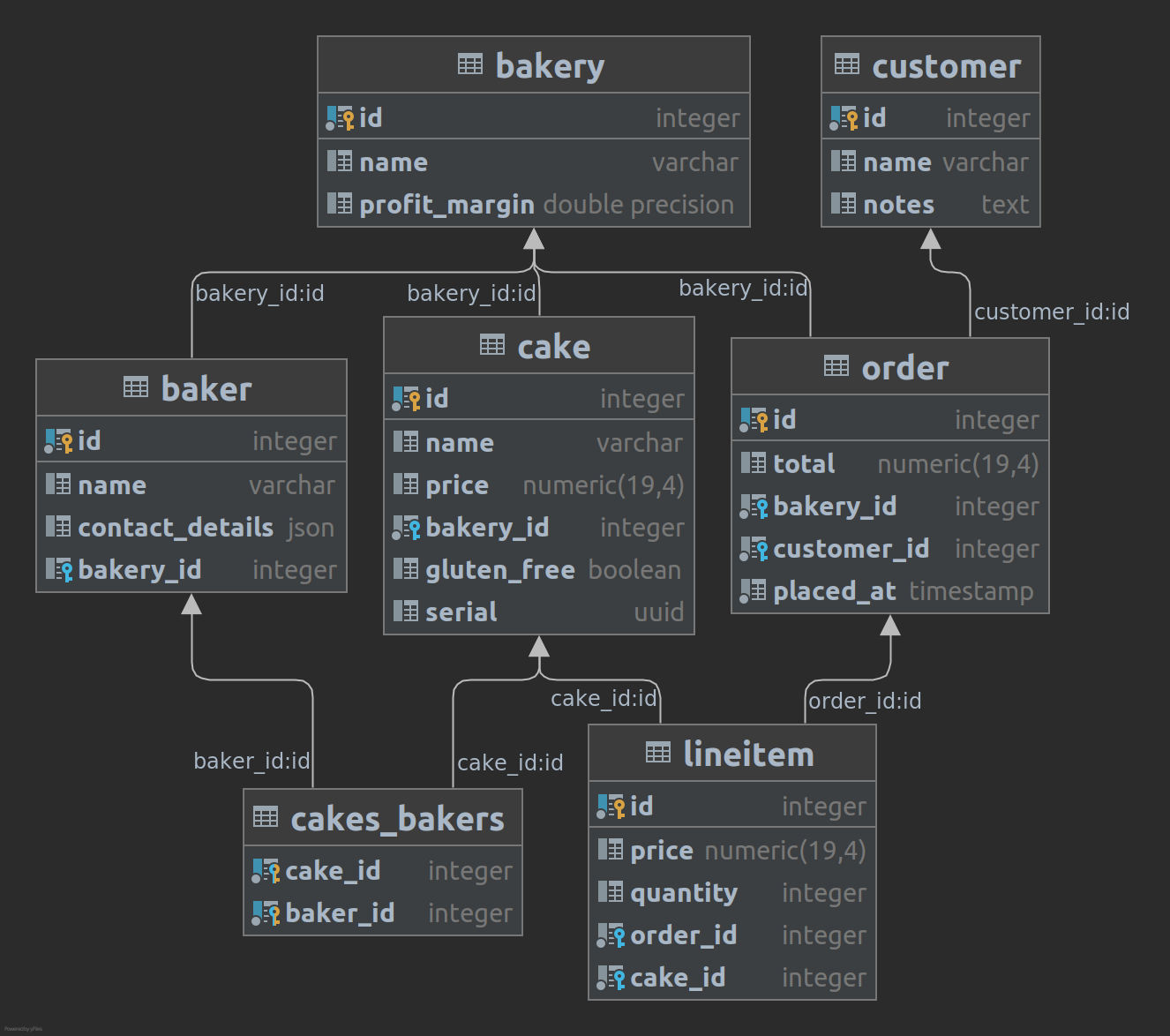

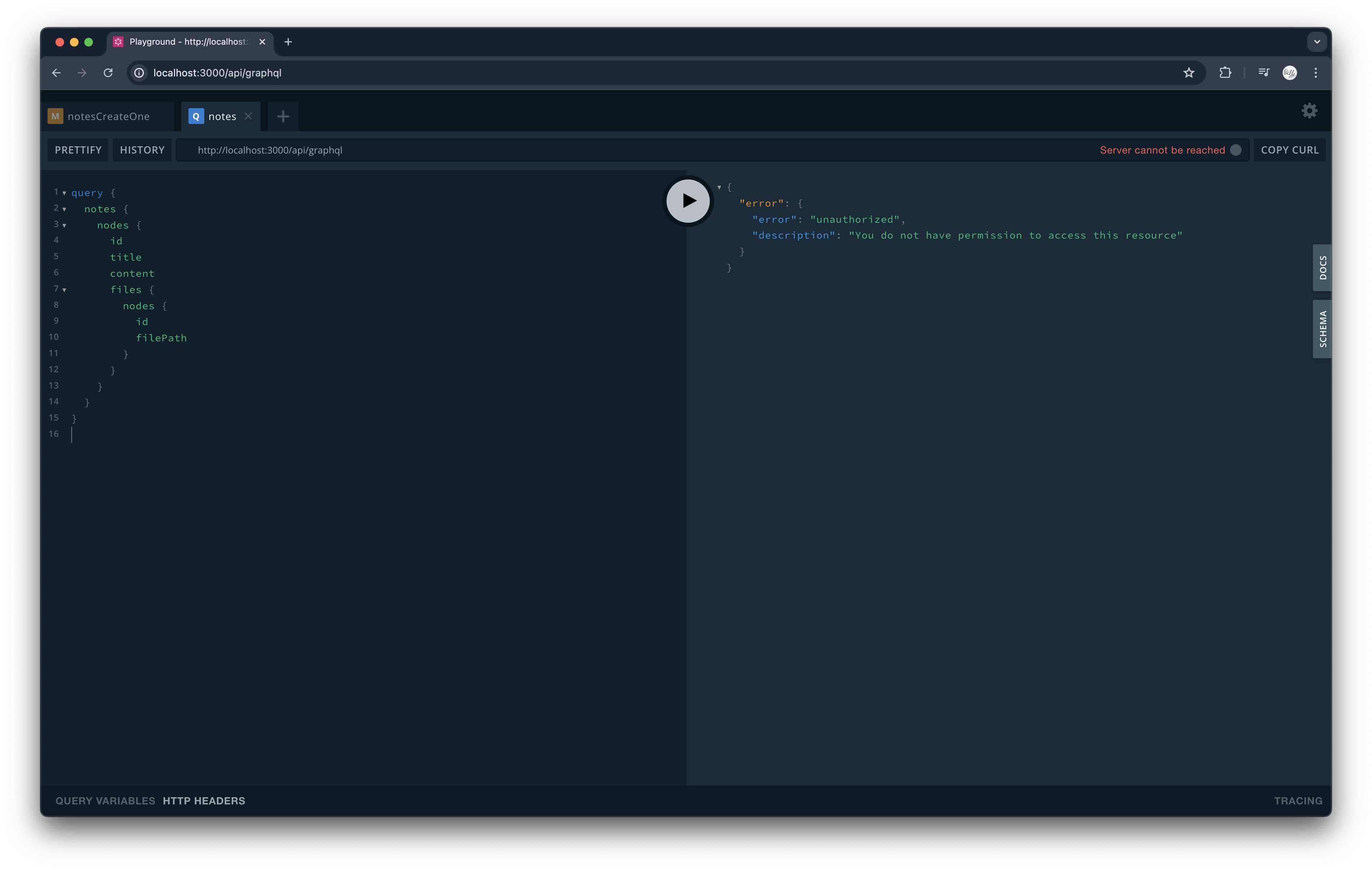

Role Based Access Control

#2683 We will cover this in detail in a future blog post, but here's a sneak peek.

SeaORM RBAC

- A hierarchical RBAC engine that is table scoped

  - a user has 1 (and only 1) role

  - a role has a set of permissions on a set of resources

  - permissions here are CRUD operations and resources are tables

  - but the engine is generic, so it can be used for other things

  - roles have hierarchy, and can inherit permissions from multiple roles

  - there is a wildcard * (opt-in) to grant all permissions or resources

  - individual users can have rule overrides

- A set of Entities to load / store the access control rules to / from the database

- A query auditor that dissects queries for necessary permissions (implemented in SeaQuery)

- Integration of RBAC into SeaORM in the form of RestrictedConnection. It implements ConnectionTrait and behaves like a normal connection, but audits all queries, performs permission checks before execution, and rejects queries accordingly. All Entity operations except raw SQL are supported. Complex nested joins, INSERT INTO SELECT FROM, and even CTE queries are supported.

// set the rules
rbac.add_roles(&db_conn, &["admin", "manager", "public"]).await?;
rbac.add_role_permissions(&db_conn, "admin", &["create"], &["bakery"]).await?;

// load rules from database
db_conn.load_rbac().await?;

// admin can create bakery
let db: RestrictedConnection = db_conn.restricted_for(admin)?;
let seaside_bakery = bakery::ActiveModel {
    name: Set("SeaSide Bakery".to_owned()),
    ..Default::default()
};
assert!(Bakery::insert(seaside_bakery).exec(&db).await.is_ok());

// manager cannot create bakery
let db: RestrictedConnection = db_conn.restricted_for(manager)?;
assert!(matches!(
    Bakery::insert(bakery::ActiveModel::default())
        .exec(&db)
        .await,
    Err(DbErr::AccessDenied { .. })
));

// transaction works too
let txn: RestrictedTransaction = db.begin().await?;
baker::Entity::insert(baker::ActiveModel {
    name: Set("Master Baker".to_owned()),
    bakery_id: Set(Some(1)),
    ..Default::default()
})
.exec(&txn)
.await?;
txn.commit().await?;
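Until the detailed post is out, the rules listed above (permissions on resources, role inheritance and the * wildcard) can be approximated by a small in-memory model. The sketch below is written for this overview under our own assumptions and is not the SeaORM RBAC engine: the Rbac type, its methods and the "select" / "update" permission names are all hypothetical, and per-user overrides are left out.

```rust
use std::collections::{HashMap, HashSet};

// Hypothetical, simplified RBAC model.
#[derive(Default)]
struct Rbac {
    // role -> set of (permission, resource) pairs; "*" acts as a wildcard
    grants: HashMap<String, HashSet<(String, String)>>,
    // role -> roles it inherits permissions from
    parents: HashMap<String, Vec<String>>,
}

impl Rbac {
    fn grant(&mut self, role: &str, permission: &str, resource: &str) {
        self.grants
            .entry(role.to_owned())
            .or_default()
            .insert((permission.to_owned(), resource.to_owned()));
    }

    fn inherit(&mut self, role: &str, parent: &str) {
        self.parents
            .entry(role.to_owned())
            .or_default()
            .push(parent.to_owned());
    }

    // Walks the role and everything it inherits from, looking for a grant
    // that matches the request either exactly or through a wildcard.
    fn is_allowed(&self, role: &str, permission: &str, resource: &str) -> bool {
        let mut visited = HashSet::new();
        let mut stack = vec![role.to_owned()];
        while let Some(current) = stack.pop() {
            // Skip roles already checked, which also guards against cycles.
            if !visited.insert(current.clone()) {
                continue;
            }
            if let Some(set) = self.grants.get(&current) {
                let hit = set.iter().any(|(p, r)| {
                    (p == permission || p == "*") && (r == resource || r == "*")
                });
                if hit {
                    return true;
                }
            }
            if let Some(parents) = self.parents.get(&current) {
                stack.extend(parents.iter().cloned());
            }
        }
        false
    }
}
```

In this model a manager role that inherits from public can do everything public can, plus its own grants, while anything not granted is denied by default.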

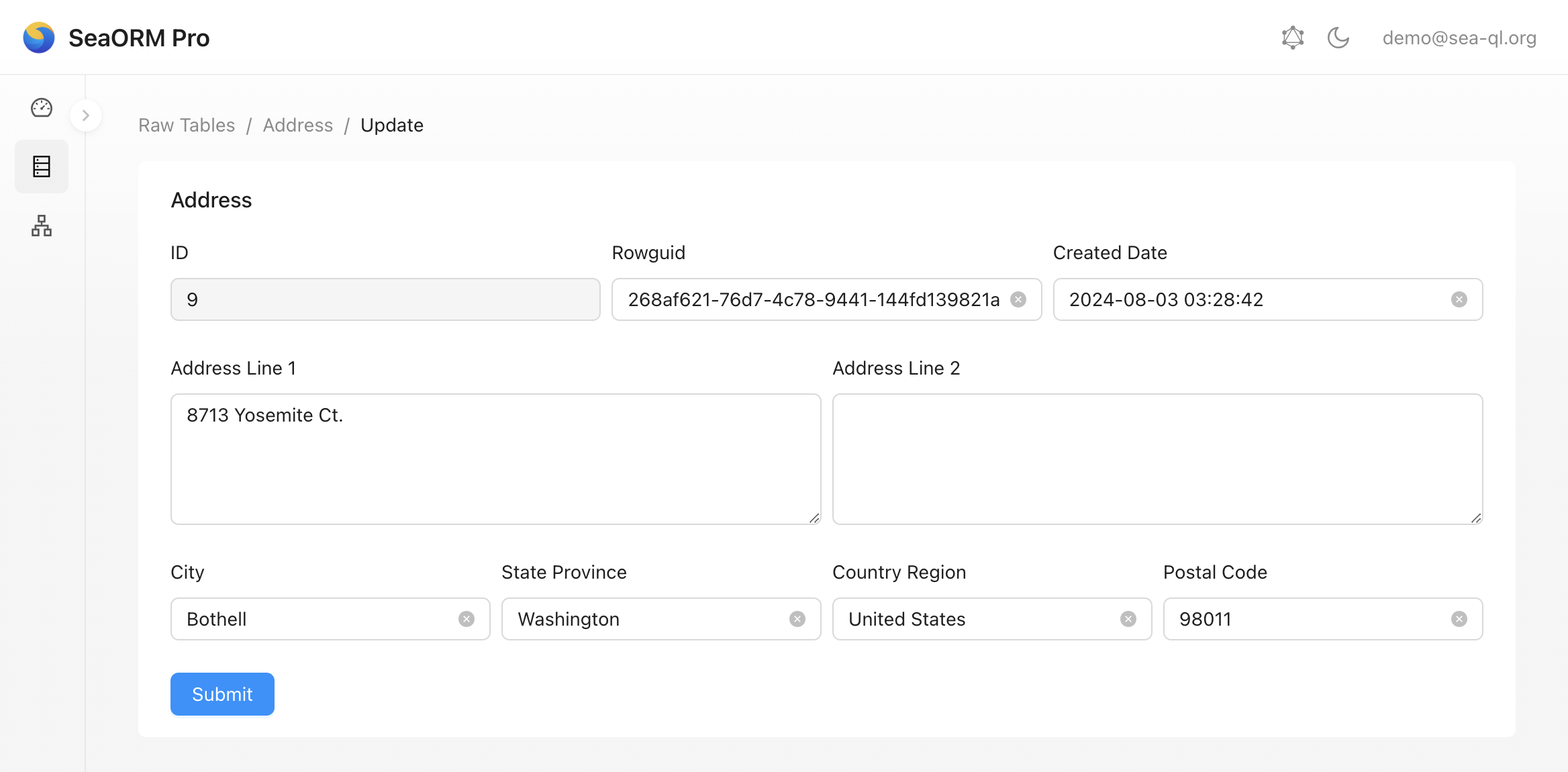

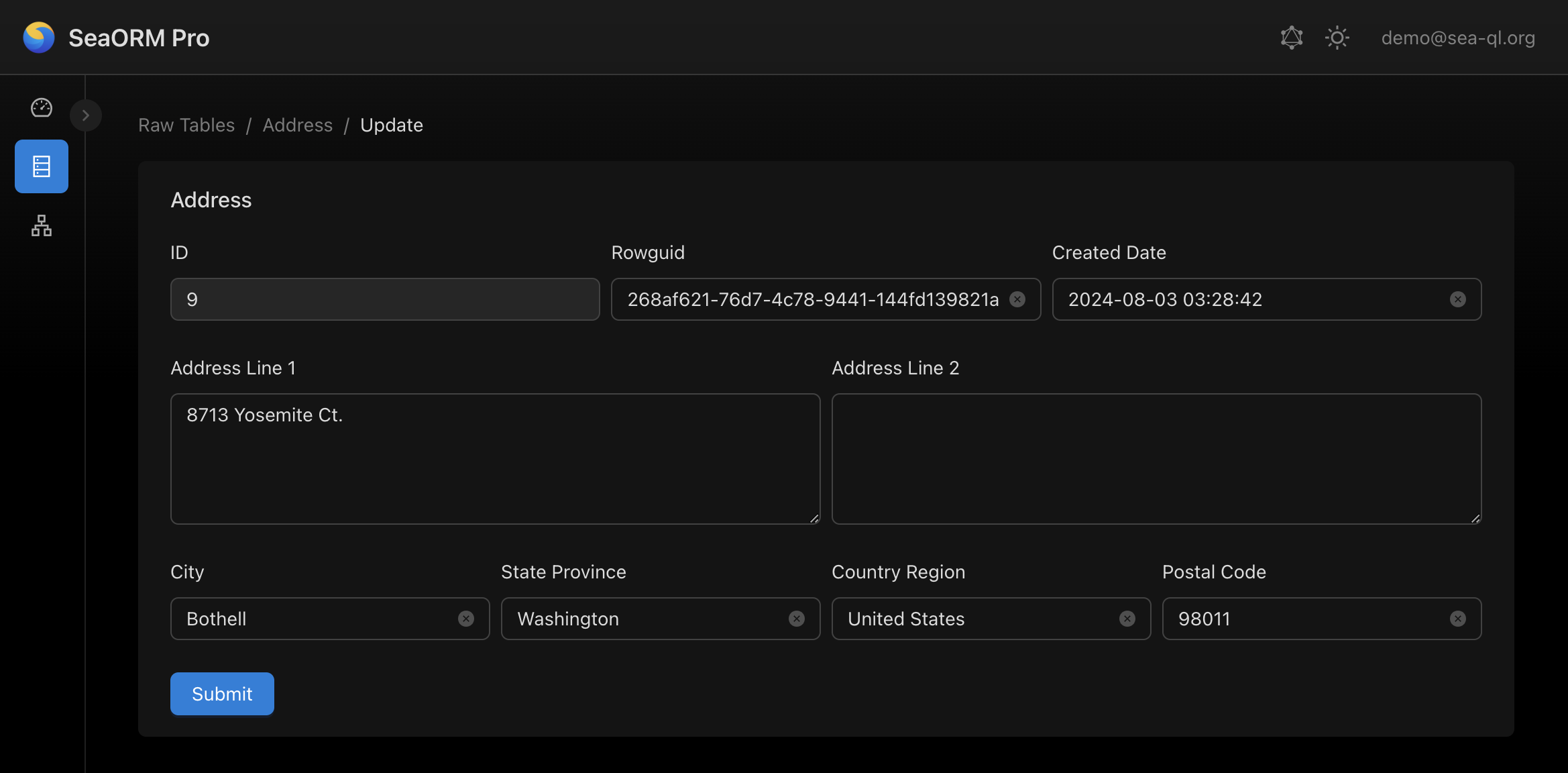

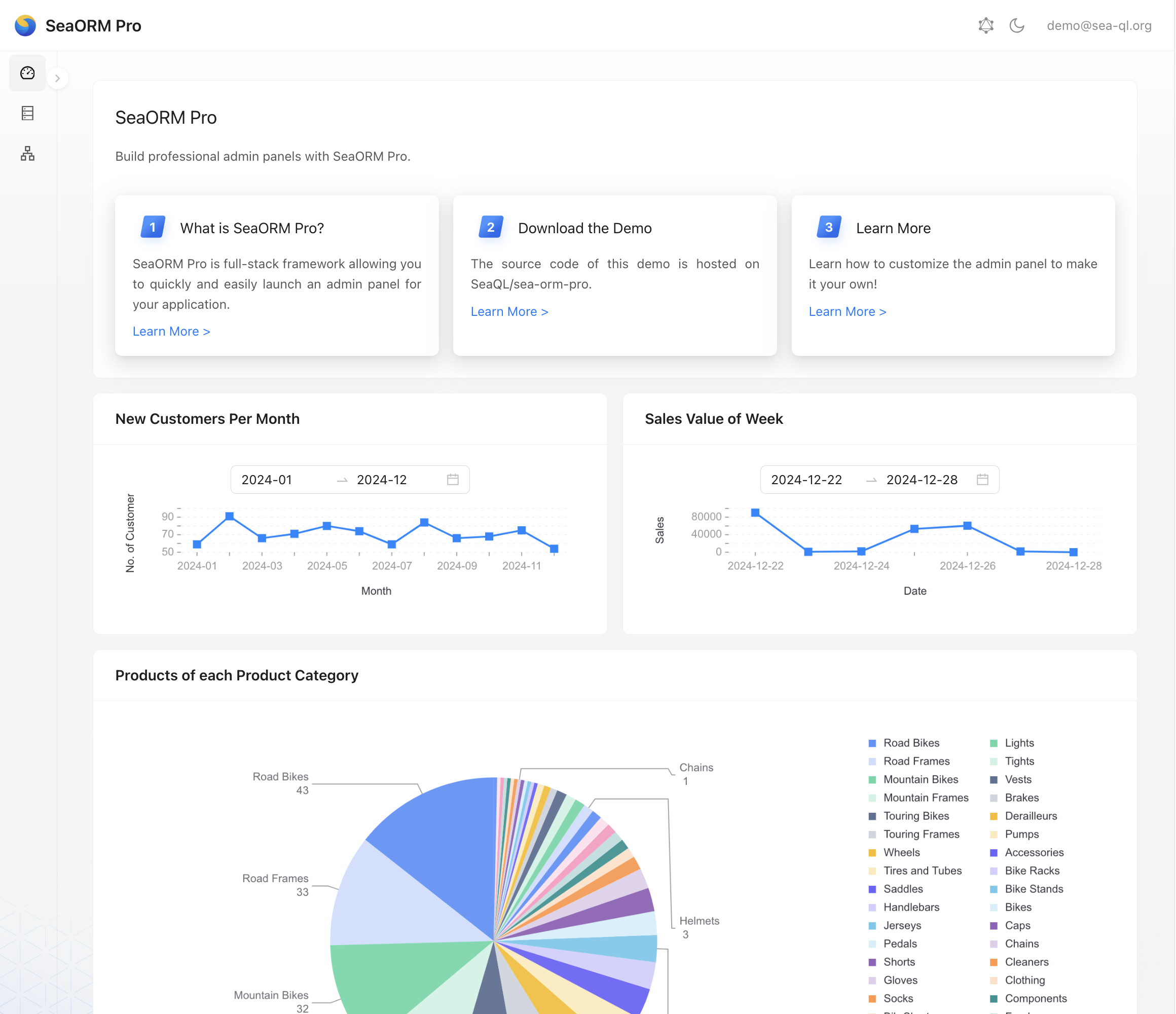

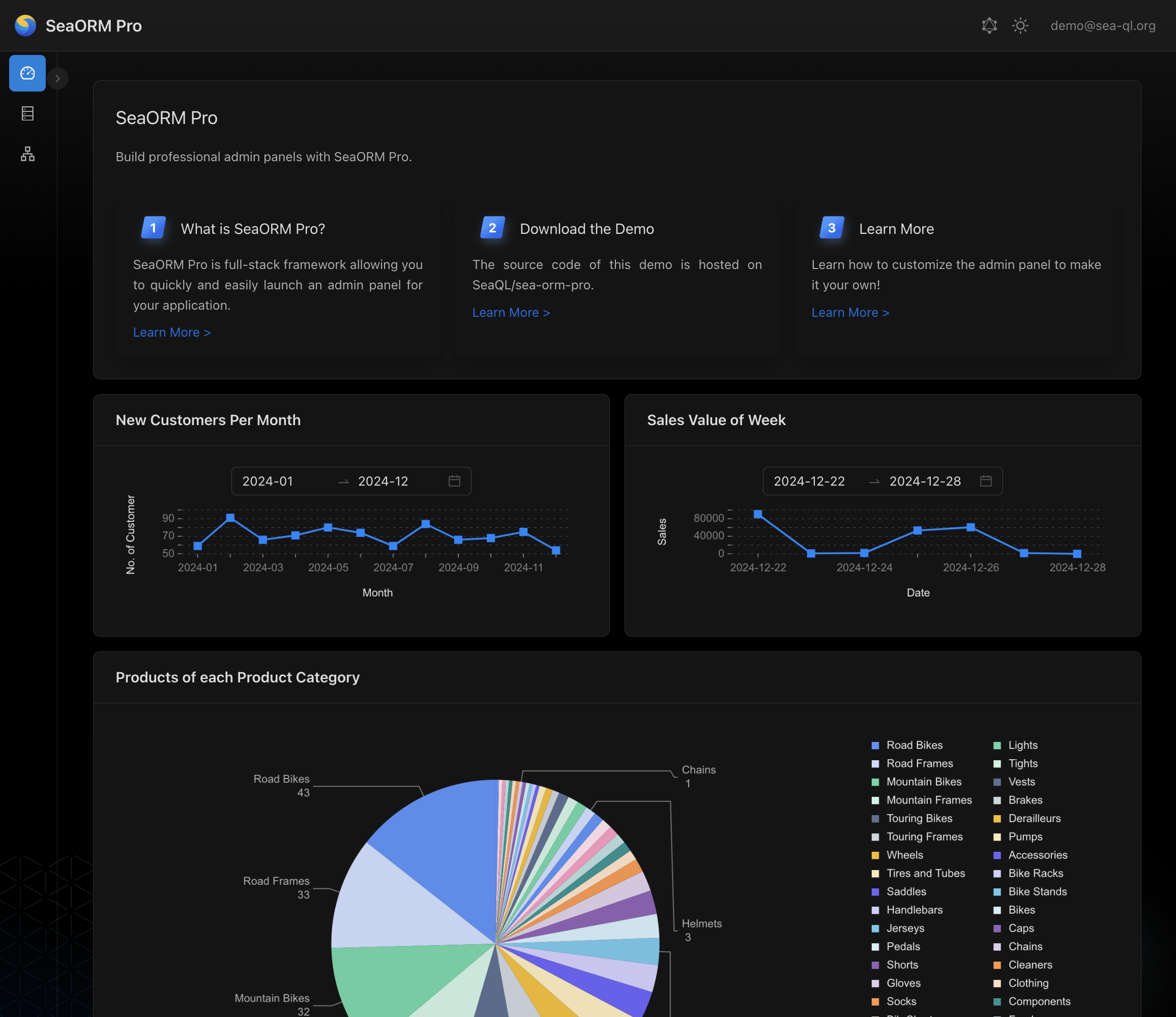

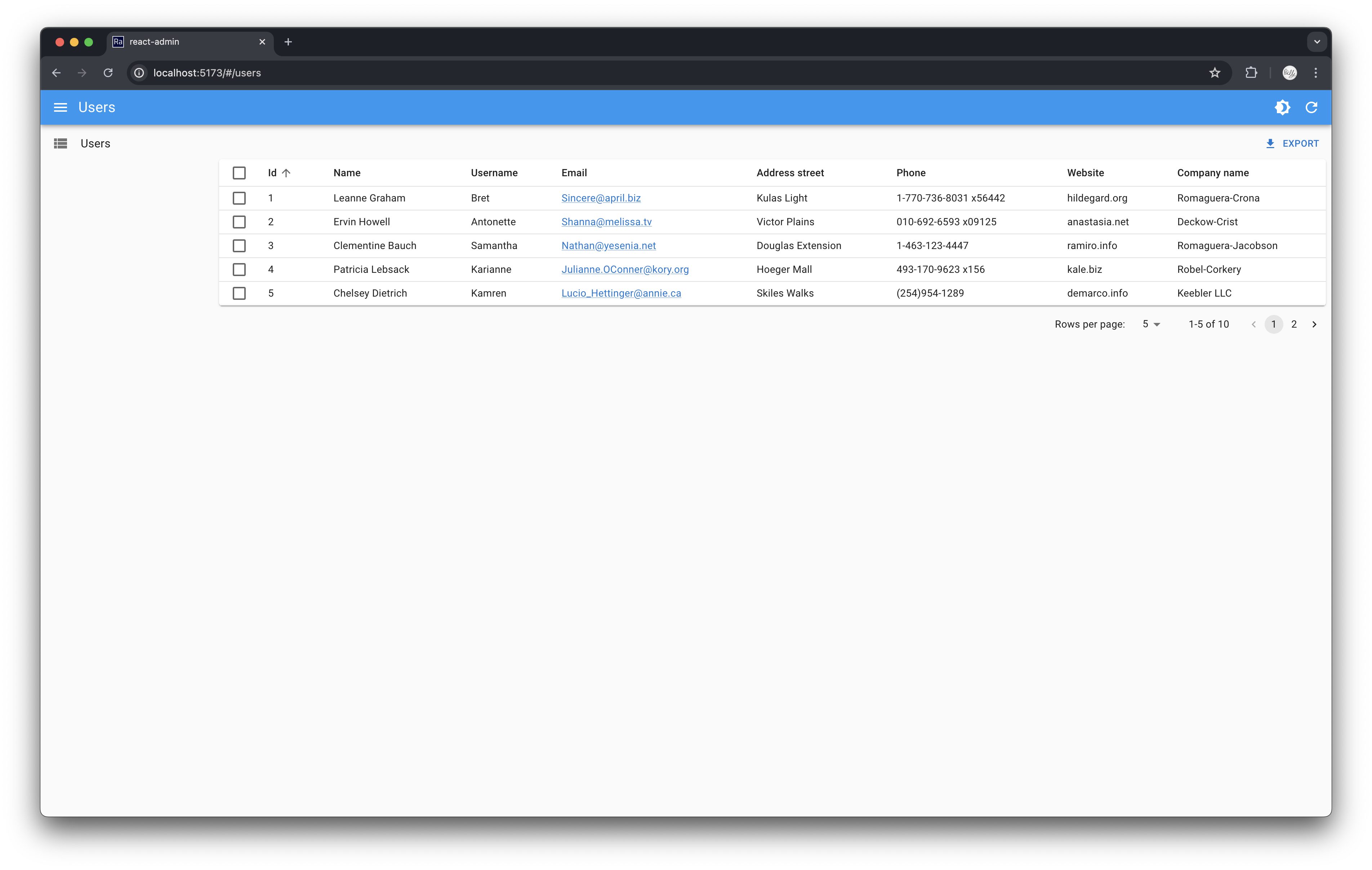

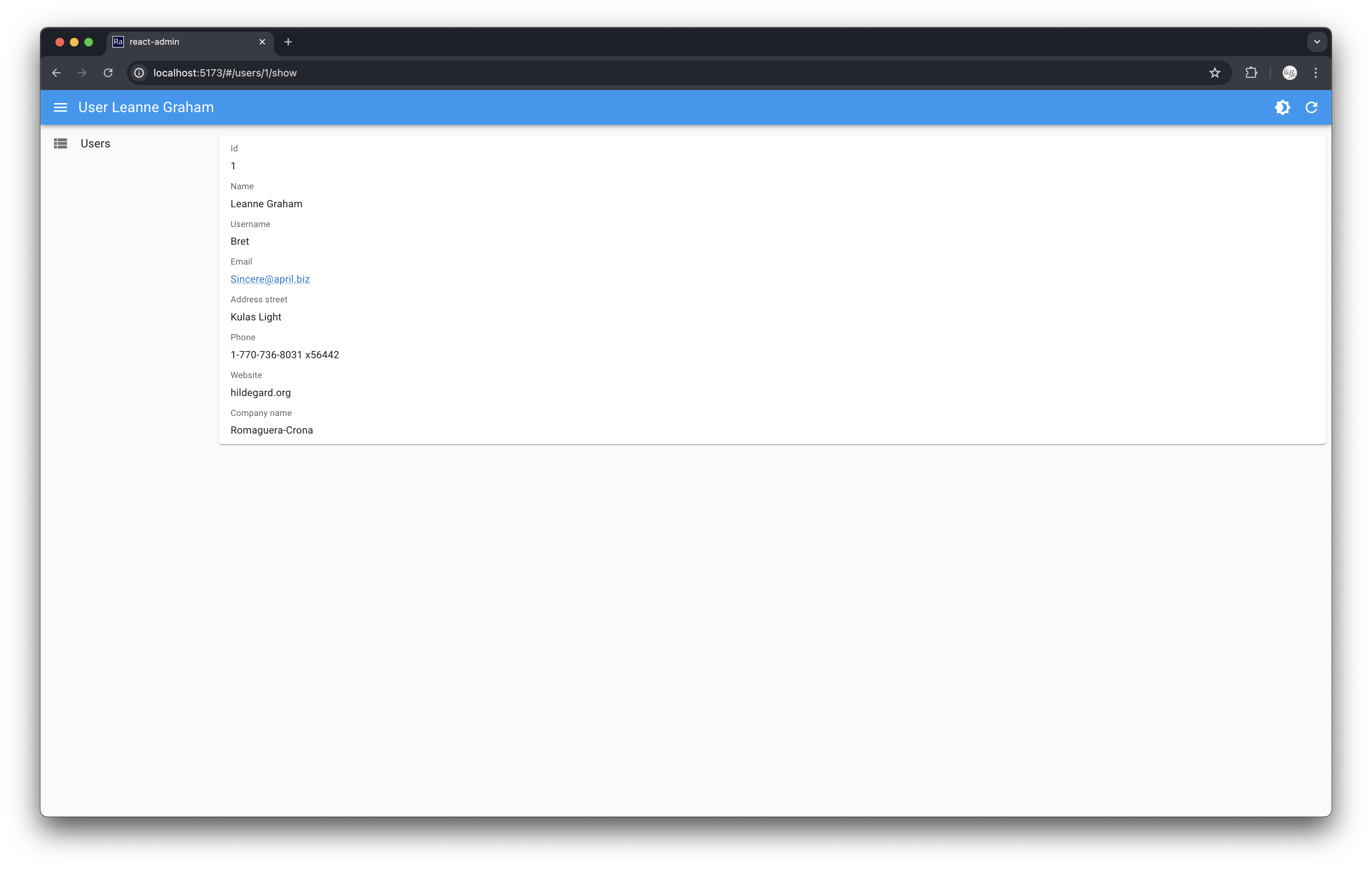

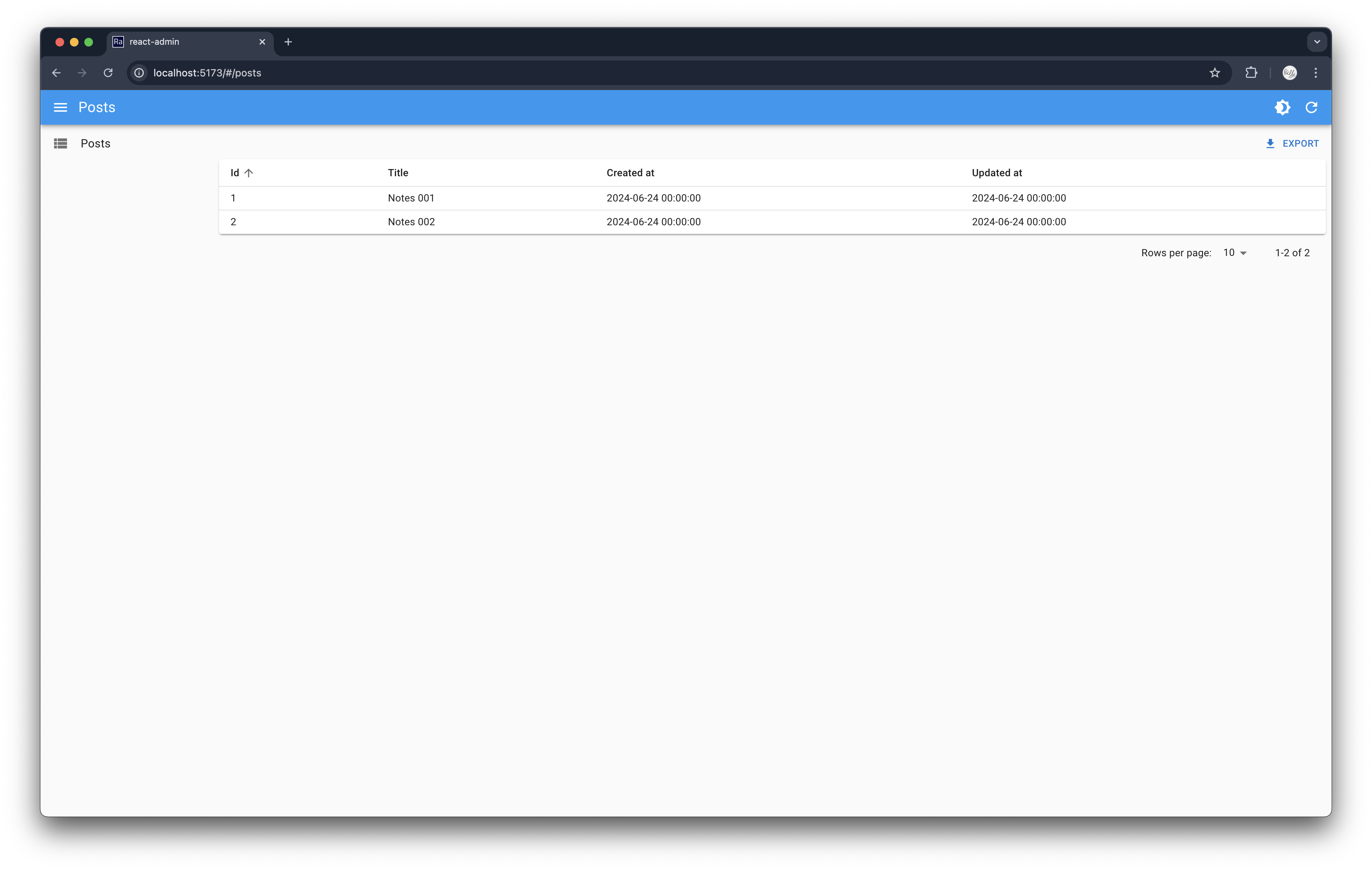

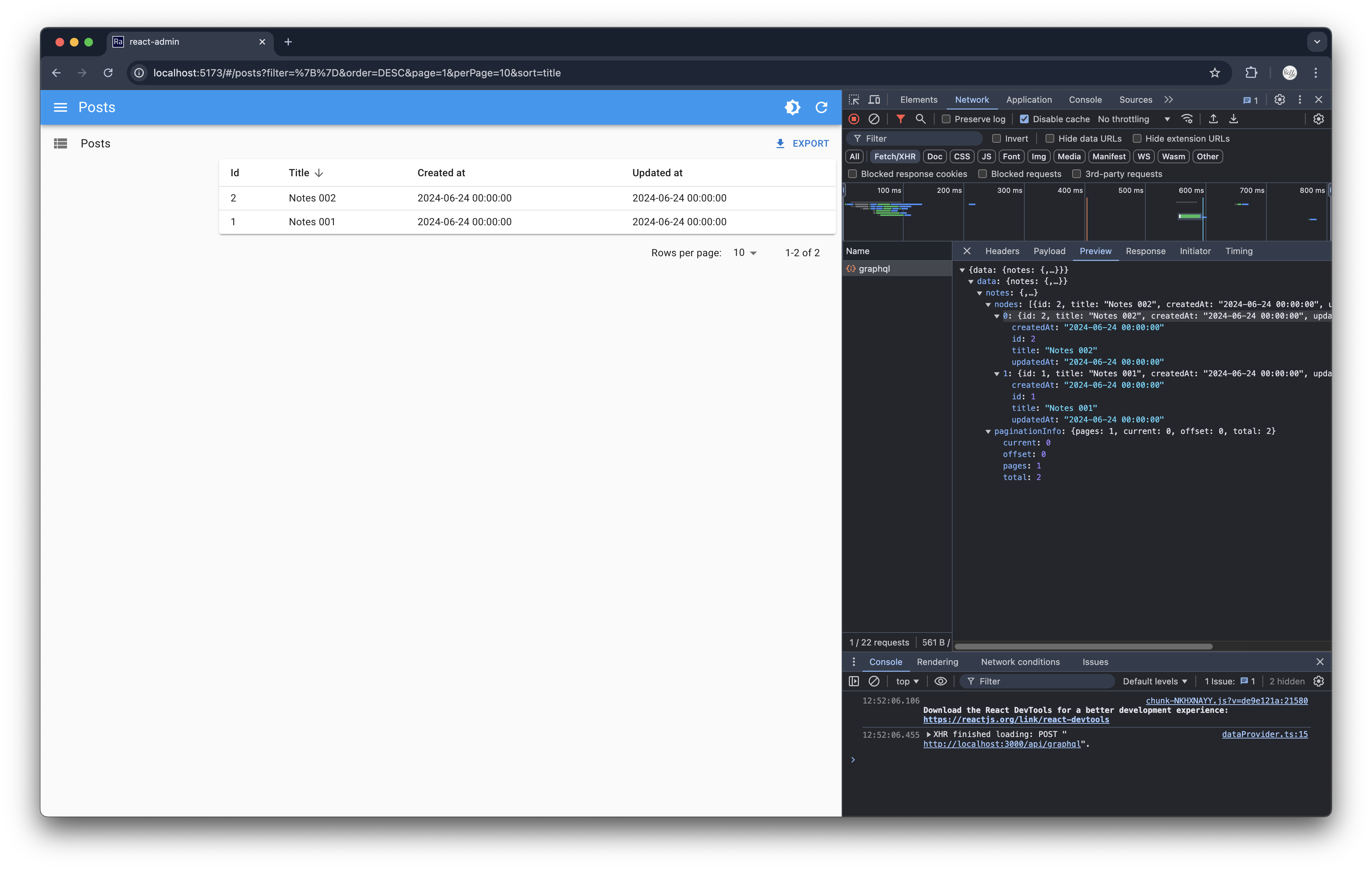

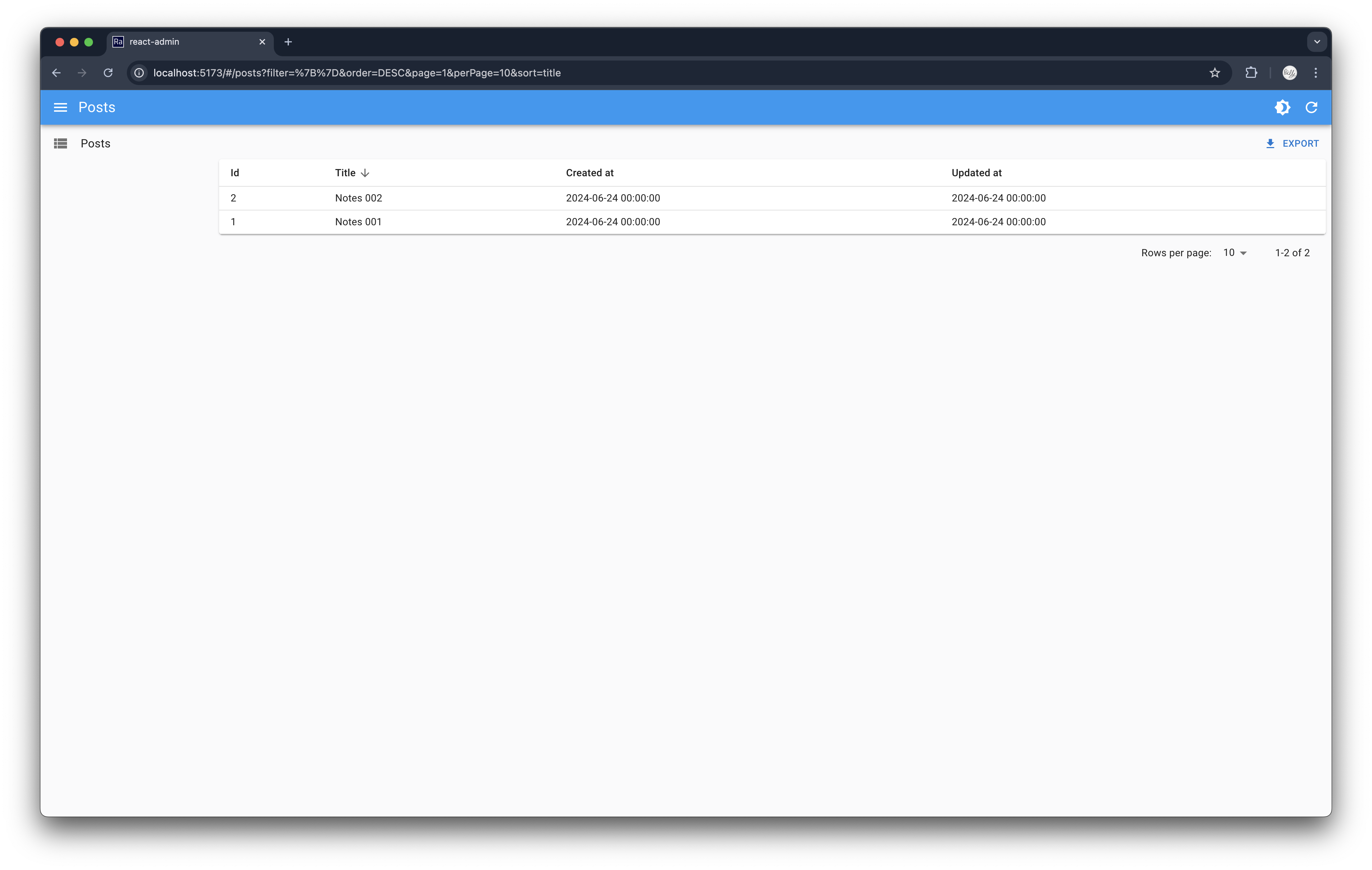

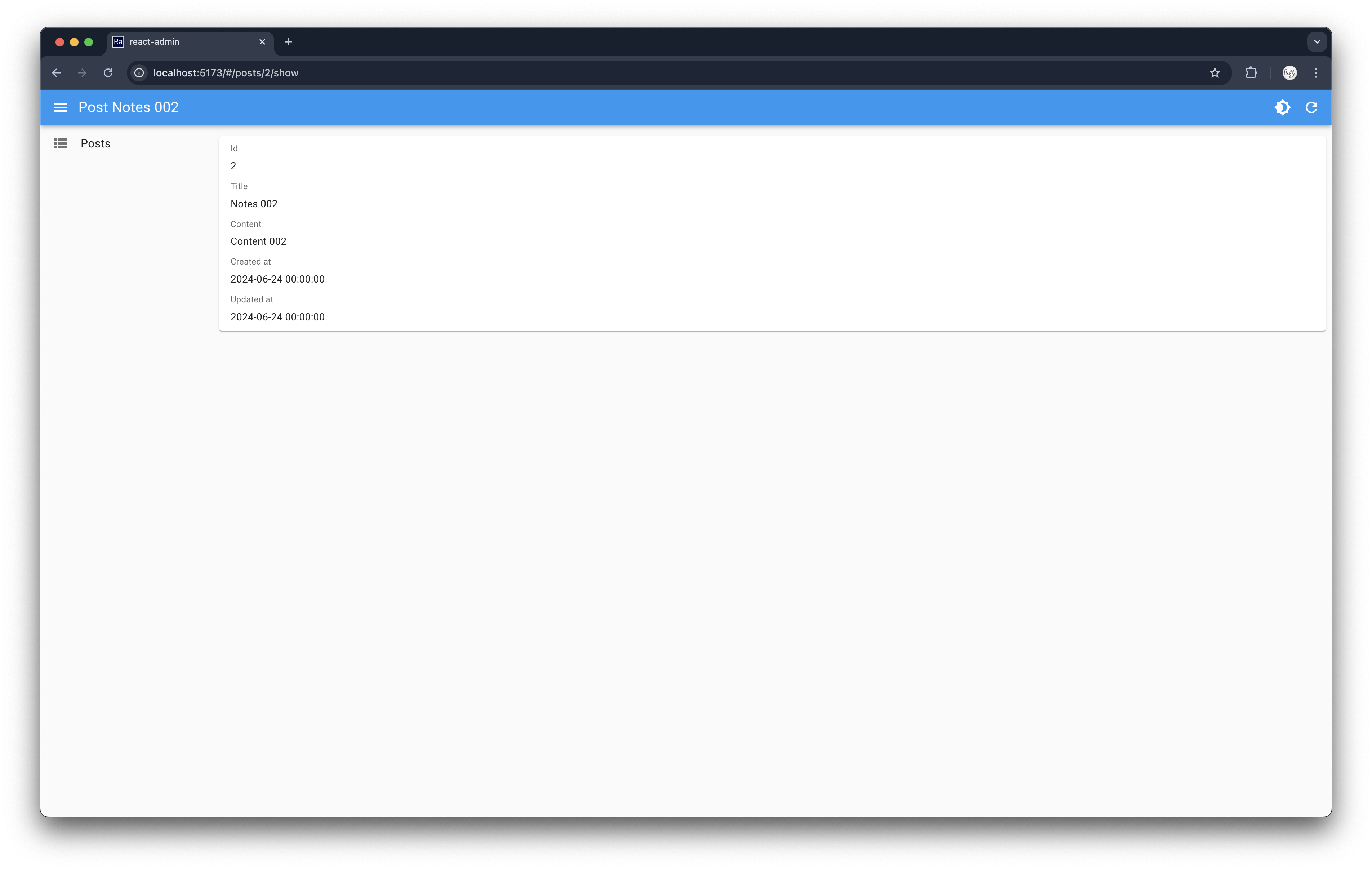

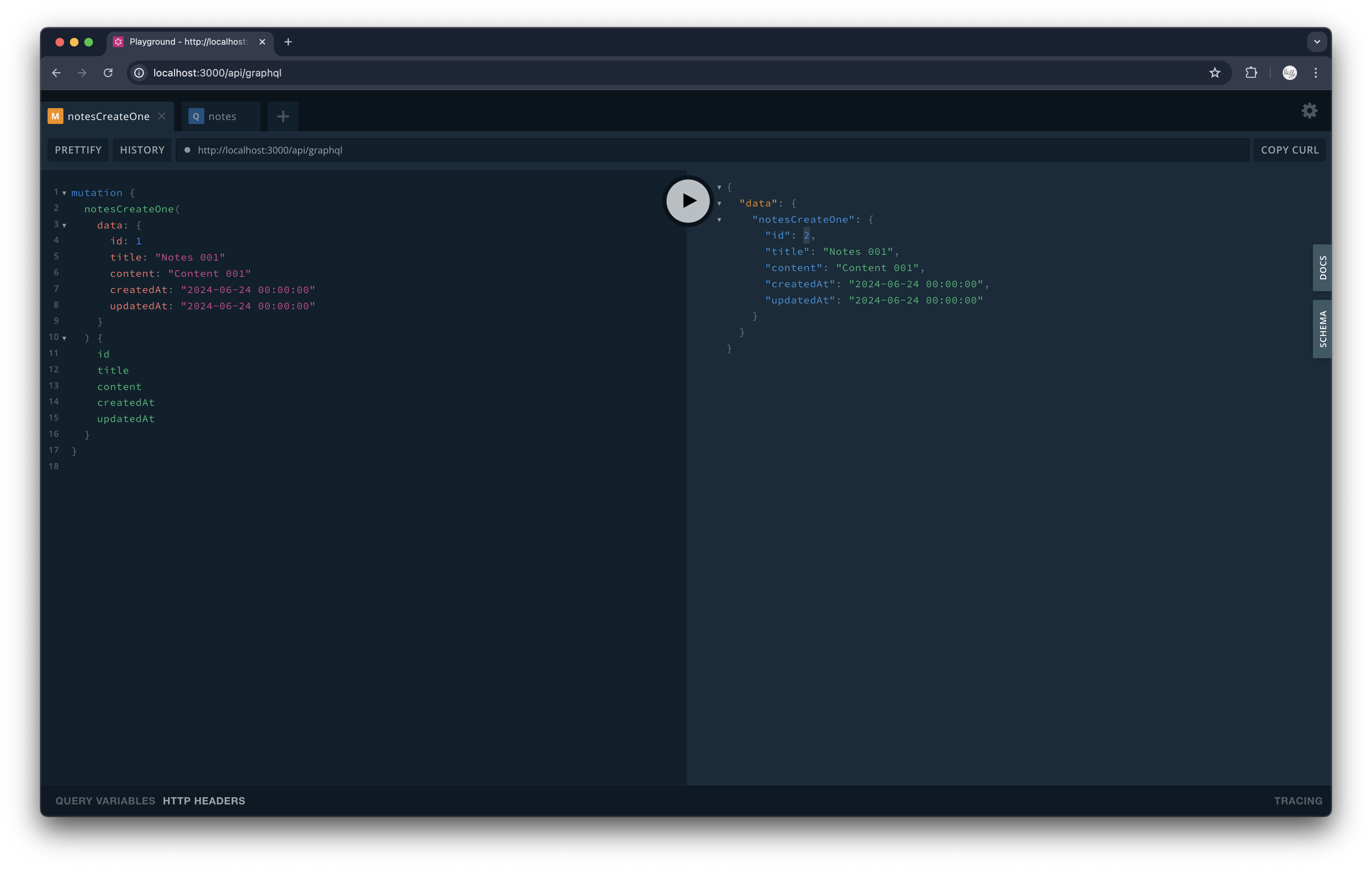

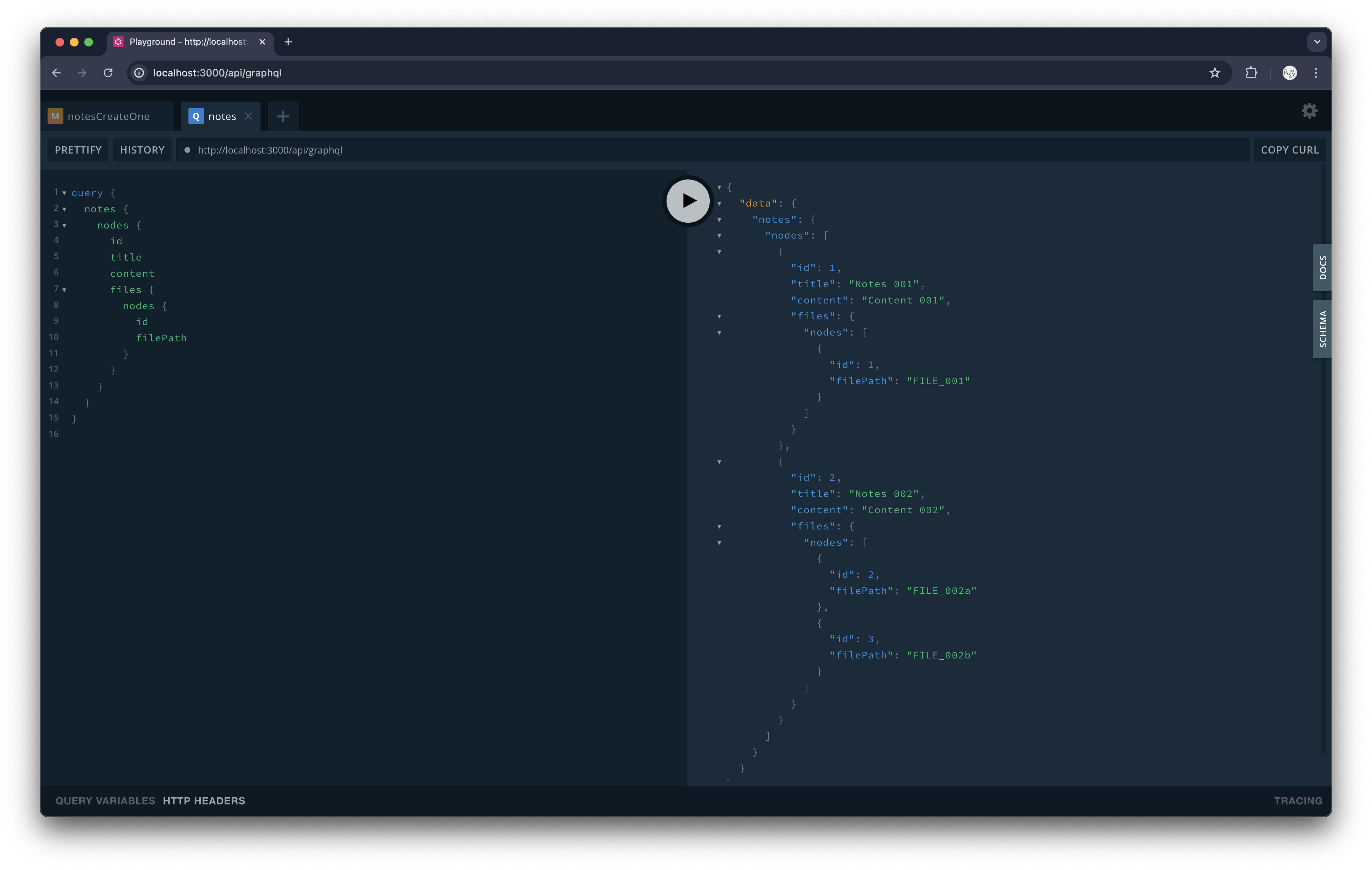

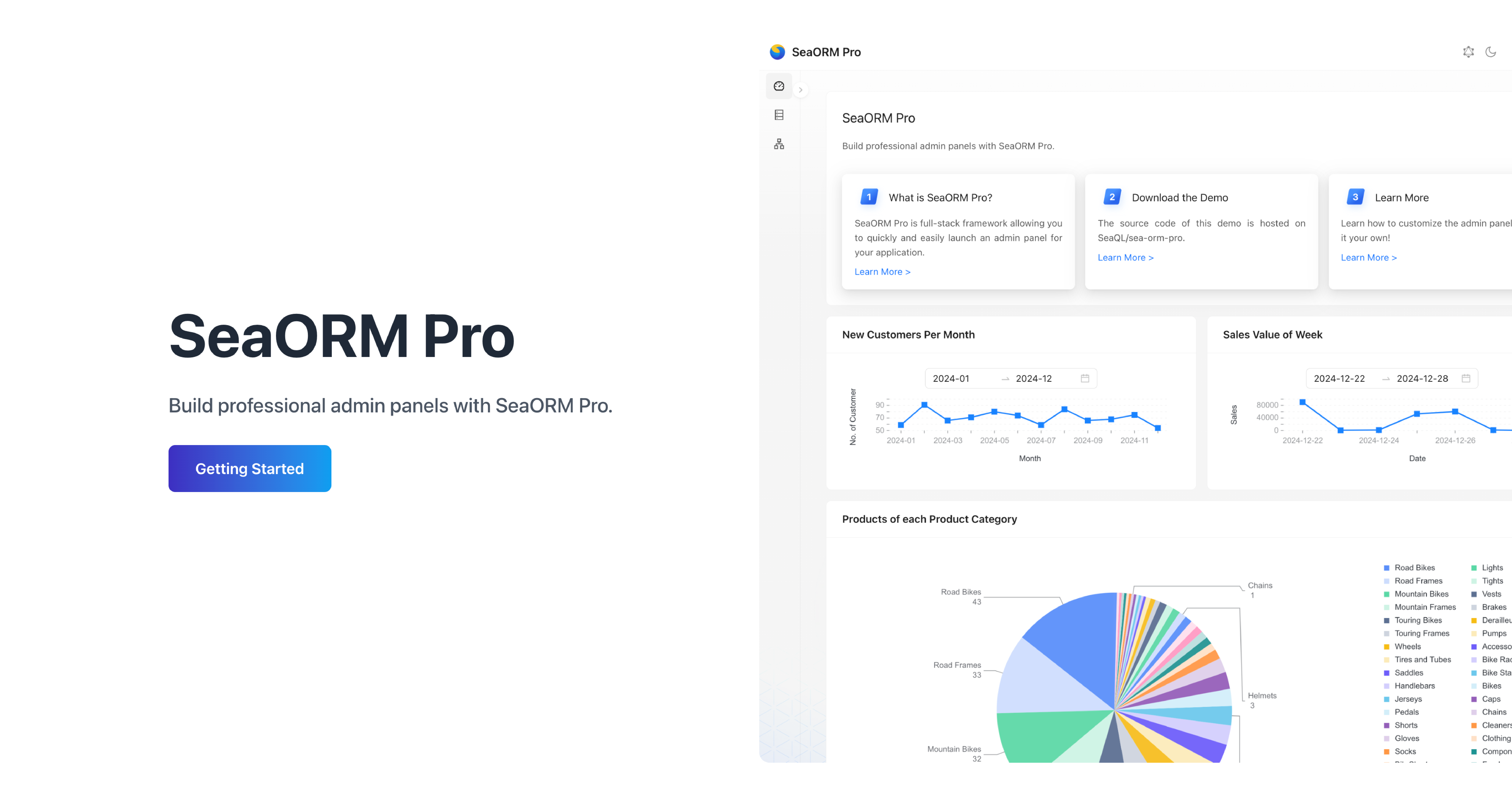

🖥️ SeaORM Pro: Professional Admin Panel

SeaORM Pro is an admin panel solution allowing you to quickly and easily launch an admin panel for your application - frontend development skills not required, but certainly nice to have!

Features:

- Full CRUD

- Built on React + GraphQL

- Built-in GraphQL resolver

- Customize the UI with simple TOML

- RBAC (coming soon with SeaORM 2.0)

More to come

SeaORM 2.0 is shaping up to be our most significant release yet - with a few breaking changes, plenty of enhancements, and a clear focus on developer experience. We'll unpack everything in the posts to come, so keep an eye out for the next update!

SeaORM 2.0 will launch alongside SeaQuery 1.0. If you make extensive use of SeaORM's underlying query builder, we recommend checking out our earlier blog post on SeaQuery 1.0 to get familiar with the changes.

If you have suggestions on breaking changes, you are welcome to post them in the discussions.

Sponsors

If you feel generous, a small donation would be greatly appreciated, and would go a long way towards sustaining the organization.

Gold Sponsor

QDX pioneers quantum dynamics–powered drug discovery, leveraging AI and supercomputing to accelerate molecular modeling. We're grateful to QDX for sponsoring the development of SeaORM, the SQL toolkit that powers their data intensive applications.

GitHub Sponsors

A big shout out to our GitHub sponsors 😇:

🦀 Rustacean Sticker Pack

The Rustacean Sticker Pack is the perfect way to express your passion for Rust. Our stickers are made with a premium water-resistant vinyl with a unique matte finish.

Sticker Pack Contents:

- Logos of SeaQL projects: SeaQL, SeaORM, SeaQuery, Seaography

- Mascots: Ferris the Crab x 3, Terres the Hermit Crab

- The Rustacean wordmark

Support SeaQL and get a Sticker Pack!