What's new in SeaORM 1.1.12

This blog post summarizes the new features and enhancements introduced in SeaORM 1.1.

New Features

Implement DeriveValueType for enum strings

DeriveValueType now supports enum types. It offers a simpler alternative to DeriveActiveEnum for client-side enums backed by string database types.

#[derive(DeriveValueType)]

#[sea_orm(value_type = "String")]

pub enum Tag {

Hard,

Soft,

}

// `from_str` defaults to `std::str::FromStr::from_str`

impl std::str::FromStr for Tag {

type Err = sea_orm::sea_query::ValueTypeErr;

fn from_str(s: &str) -> Result<Self, Self::Err> { .. }

}

// `to_str` defaults to `std::string::ToString::to_string`.

impl std::fmt::Display for Tag {

fn fmt(&self, f: &mut std::fmt::Formatter<'_>) -> std::fmt::Result { .. }

}

The following trait impls are generated, removing the boilerplate previously needed:

DeriveValueType expansion

#[automatically_derived]

impl std::convert::From<Tag> for sea_orm::Value {

fn from(source: Tag) -> Self {

std::string::ToString::to_string(&source).into()

}

}

#[automatically_derived]

impl sea_orm::TryGetable for Tag {

fn try_get_by<I: sea_orm::ColIdx>(res: &sea_orm::QueryResult, idx: I)

-> std::result::Result<Self, sea_orm::TryGetError> {

let string = String::try_get_by(res, idx)?;

std::str::FromStr::from_str(&string).map_err(|err| sea_orm::TryGetError::DbErr(sea_orm::DbErr::Type(format!("{err:?}"))))

}

}

#[automatically_derived]

impl sea_orm::sea_query::ValueType for Tag {

fn try_from(v: sea_orm::Value) -> std::result::Result<Self, sea_orm::sea_query::ValueTypeErr> {

let string = <String as sea_orm::sea_query::ValueType>::try_from(v)?;

std::str::FromStr::from_str(&string).map_err(|_| sea_orm::sea_query::ValueTypeErr)

}

fn type_name() -> std::string::String {

stringify!(Tag).to_owned()

}

fn array_type() -> sea_orm::sea_query::ArrayType {

sea_orm::sea_query::ArrayType::String

}

fn column_type() -> sea_orm::sea_query::ColumnType {

sea_orm::sea_query::ColumnType::String(sea_orm::sea_query::StringLen::None)

}

}

#[automatically_derived]

impl sea_orm::sea_query::Nullable for Tag {

fn null() -> sea_orm::Value {

sea_orm::Value::String(None)

}

}

You can override from_str and to_str with custom functions, which is especially useful if you're using strum::Display and strum::EnumString, or manually implemented methods:

#[derive(DeriveValueType)]

#[sea_orm(

value_type = "String",

from_str = "Tag::from_str",

to_str = "Tag::to_str"

)]

pub enum Tag {

Color,

Grey,

}

impl Tag {

fn from_str(s: &str) -> Result<Self, ValueTypeErr> { .. }

fn to_str(&self) -> &'static str { .. }

}
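As a rough illustration of what the elided `from_str` and `to_str` bodies might look like, here is a std-only sketch. It leaves out the `DeriveValueType` derive so that it compiles without SeaORM. `ParseTagError` is a hypothetical stand-in for `ValueTypeErr`, and the lowercase string spellings are an assumption made for this example.

```rust
/// Hypothetical stand-in for `sea_orm::sea_query::ValueTypeErr`.
#[derive(Debug, PartialEq, Eq)]
pub struct ParseTagError;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Tag {
    Color,
    Grey,
}

impl Tag {
    /// Parse the string stored in the database back into the enum.
    /// The lowercase spellings are an assumption for this sketch.
    pub fn from_str(s: &str) -> Result<Self, ParseTagError> {
        match s {
            "color" => Ok(Tag::Color),
            "grey" => Ok(Tag::Grey),
            _ => Err(ParseTagError),
        }
    }

    /// Produce the string that would be written to the database.
    pub fn to_str(&self) -> &'static str {
        match self {
            Tag::Color => "color",
            Tag::Grey => "grey",
        }
    }
}
```

Whatever spellings you choose, the two functions should round-trip: every string returned by `to_str` should parse back to the same variant through `from_str`.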

Support Postgres IpNetwork

#2395 (under feature flag with-ipnetwork)

// Model

#[derive(Clone, Debug, PartialEq, Eq, DeriveEntityModel)]

#[sea_orm(table_name = "host_network")]

pub struct Model {

#[sea_orm(primary_key)]

pub id: i32,

pub ipaddress: IpNetwork,

#[sea_orm(column_type = "Cidr")]

pub network: IpNetwork,

}

// Schema

sea_query::Table::create()

.table(host_network::Entity)

.col(ColumnDef::new(host_network::Column::Id).integer().not_null().auto_increment().primary_key())

.col(ColumnDef::new(host_network::Column::Ipaddress).inet().not_null())

.col(ColumnDef::new(host_network::Column::Network).cidr().not_null())

.to_owned();

// CRUD

host_network::ActiveModel {

ipaddress: Set(IpNetwork::new(Ipv6Addr::new(..))),

network: Set(IpNetwork::new(Ipv4Addr::new(..))),

..Default::default()

}

Added default_values to ActiveModelTrait

ActiveModel::default() returns ActiveModel { .. NotSet } by default (this can be overridden).

We've added a new method, default_values(), which sets all fields to their actual Default::default() values.

This fills in a gap in the type system to help with serde. A real-world use case is to improve ActiveModel::from_json, an upcoming new feature (which is a breaking change, sadly).

#[derive(DeriveEntityModel)]

#[sea_orm(table_name = "fruit")]

pub struct Model {

#[sea_orm(primary_key)]

pub id: i32,

pub name: String,

pub cake_id: Option<i32>,

pub type_without_default: active_enums::Tea,

}

assert_eq!(

fruit::ActiveModel::default_values(),

fruit::ActiveModel {

id: Set(0), // integer

name: Set("".into()), // string

cake_id: Set(None), // option

type_without_default: NotSet, // not available

},

);

If you are interested in how this works under the hood, a new method, Value::dummy_value, was added to SeaQuery:

use sea_orm::sea_query::Value;

let v = Value::Int(None);

let n = v.dummy_value();

assert_eq!(n, Value::Int(Some(0)));
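To make the idea concrete without depending on SeaQuery, here is a minimal sketch of how such a method could behave on a cut-down enum. This `Value` type is a hypothetical three-variant stand-in, not SeaQuery's real definition. The sketch also assumes the existing payload is ignored, which may not match the library's behavior.

```rust
/// Hypothetical, cut-down stand-in for SeaQuery's `Value` enum.
#[derive(Debug, Clone, PartialEq)]
pub enum Value {
    Bool(Option<bool>),
    Int(Option<i32>),
    String(Option<String>),
}

impl Value {
    /// Return the same variant holding that type's `Default::default()`.
    /// Assumption: any existing payload is discarded.
    pub fn dummy_value(&self) -> Value {
        match self {
            Value::Bool(_) => Value::Bool(Some(Default::default())),
            Value::Int(_) => Value::Int(Some(Default::default())),
            Value::String(_) => Value::String(Some(Default::default())),
        }
    }
}
```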

The real magic happens with a set of new traits, DefaultActiveValue, DefaultActiveValueNone and DefaultActiveValueNotSet, which take advantage of Rust's autoref specialization mechanism, as used by anyhow:

use sea_orm::value::{DefaultActiveValue, DefaultActiveValueNone, DefaultActiveValueNotSet};

let v = (&ActiveValue::<i32>::NotSet).default_value();

assert_eq!(v, ActiveValue::Set(0));

let v = (&ActiveValue::<Option<i32>>::NotSet).default_value();

assert_eq!(v, ActiveValue::Set(None));

let v = (&ActiveValue::<String>::NotSet).default_value();

assert_eq!(v, ActiveValue::Set("".to_owned()));

let v = (&ActiveValue::<Option<String>>::NotSet).default_value();

assert_eq!(v, ActiveValue::Set(None));

let v = (&ActiveValue::<TimeDateTime>::NotSet).default_value();

assert!(matches!(v, ActiveValue::Set(_)));

This enables progressive enhancements based on the traits of the individual ActiveValue type.
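For readers unfamiliar with autoref specialization, the following std-only sketch shows the general technique with two traits. It is not SeaORM's implementation: `Slot`, `DefaultSlot`, `DefaultSlotNotSet` and `NoDefault` are hypothetical names invented for this example. The trick is that method resolution tries the receiver type as written before adding another reference, so the impl on `Slot<T>` wins whenever its `T: Default` bound holds.

```rust
// Hypothetical stand-in for SeaORM's `ActiveValue`; not the real type.
#[derive(Debug, PartialEq)]
pub enum Slot<T> {
    Set(T),
    NotSet,
}

// Higher-priority trait: implemented on `Slot<T>` itself, so its `&self`
// receiver is `&Slot<T>` and matches the call expression without an autoref.
pub trait DefaultSlot {
    type Out;
    fn default_value(&self) -> Self::Out;
}

impl<T: Default> DefaultSlot for Slot<T> {
    type Out = Slot<T>;
    fn default_value(&self) -> Slot<T> {
        Slot::Set(T::default())
    }
}

// Fallback trait: implemented on `&Slot<T>`, so its receiver is `&&Slot<T>`
// and is only considered after one extra autoref.
pub trait DefaultSlotNotSet {
    type Out;
    fn default_value(&self) -> Self::Out;
}

impl<T> DefaultSlotNotSet for &Slot<T> {
    type Out = Slot<T>;
    fn default_value(&self) -> Slot<T> {
        Slot::NotSet
    }
}

// A type with no `Default` impl, so only the fallback applies to it.
#[derive(Debug, PartialEq)]
pub struct NoDefault;

// `i32: Default`, so the call resolves to `DefaultSlot`.
pub fn default_int() -> Slot<i32> {
    (&Slot::<i32>::NotSet).default_value()
}

// `NoDefault` has no `Default`, so the call falls through to `DefaultSlotNotSet`.
pub fn default_opaque() -> Slot<NoDefault> {
    (&Slot::<NoDefault>::NotSet).default_value()
}
```

Note that the dispatch only works where the concrete type is known at the call site, which is one reason the technique suits derive macros, where the field types are spelled out.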

Make sea-orm-cli & sea-orm-migration dependencies optional

Some engineering teams prefer vendoring sea-orm-cli into their own project as part of the cargo workspace, and so would like to have more control of the dependency graph. This change makes it possible to pick the exact features needed by your project.

Enhancements

- Impl IntoCondition for RelationDef #2587

This allows using RelationDef directly where the query API expects an IntoCondition

let query = Query::select()

.from(fruit::Entity)

.inner_join(cake::Entity, fruit::Relation::Cake.def())

.to_owned();

assert_eq!(

query.to_string(MysqlQueryBuilder),

r#"SELECT FROM `fruit` INNER JOIN `cake` ON `fruit`.`cake_id` = `cake`.`id`"#

);

assert_eq!(

query.to_string(PostgresQueryBuilder),

r#"SELECT FROM "fruit" INNER JOIN "cake" ON "fruit"."cake_id" = "cake"."id""#

);

assert_eq!(

query.to_string(SqliteQueryBuilder),

r#"SELECT FROM "fruit" INNER JOIN "cake" ON "fruit"."cake_id" = "cake"."id""#

);

- Use fully-qualified syntax for ActiveEnum associated type #2552

- Added try_getable_postgres_array!(Vec<u8>) (to support bytea[]) #2503

- Accept LikeExpr in like and not_like #2549

- Loader: retain only unique key values in the query condition #2569

- Add proxy transaction impl #2573

- Relax TransactionError's trait bound for errors to allow anyhow::Error #2602

- [sea-orm-cli] Fix PgVector codegen #2589

- [sea-orm-cli] Support postgres array in expanded format #2545

Bug fixes

- Quote type properly in AsEnum casting #2570

assert_eq!(

lunch_set::Entity::find()

.select_only()

.column(lunch_set::Column::Tea)

.build(DbBackend::Postgres)

.to_string(),

r#"SELECT CAST("lunch_set"."tea" AS "text") FROM "lunch_set""#

// "text" is now quoted; will work for "text"[] as well

);

- Include custom column_name in DeriveColumn Column::from_str impl #2603

#[derive(DeriveEntityModel)]

pub struct Model {

#[sea_orm(column_name = "lAsTnAmE")]

last_name: String,

}

assert!(matches!(Column::from_str("lAsTnAmE").unwrap(), Column::LastName));

- Check if url is well-formed before parsing (avoid panic) #2558

let db = Database::connect("postgre://sea:sea@localhost/bakery").await?;

// note the missing `s`; results in `DbErr::Conn`

- QuerySelect::column_as method now casts ActiveEnum columns #2551

#[derive(Debug, FromQueryResult, PartialEq)]

struct SelectResult {

tea_alias: Option<Tea>,

}

assert_eq!(

SelectResult {

tea_alias: Some(Tea::EverydayTea),

},

Entity::find()

.select_only()

.column_as(Column::Tea, "tea_alias")

.into_model()

.one(db)

.await?

.unwrap()

);

- Fix unicode string enum #2218

#[derive(Debug, Clone, PartialEq, Eq, EnumIter, DeriveActiveEnum)]

#[sea_orm(rs_type = "String", db_type = "String(StringLen::None)")]

pub enum SeaORM {

#[sea_orm(string_value = "씨오알엠")]

씨오알엠,

}

Upgrades

- Upgrade sqlx to 0.8.4 #2562

- Upgrade sea-query to 0.32.5

- Upgrade sea-schema to 0.16.2

- Upgrade heck to 0.5 #2218

House Keeping

- Replace once_cell crate with std equivalent #2524 (available since rust 1.80)
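For context, the std equivalent of `once_cell::sync::Lazy` is `std::sync::LazyLock`, stabilized in Rust 1.80. The sketch below shows the general shape of such a migration. The `BACKENDS` table and `backend_for` function are hypothetical and not taken from the SeaORM codebase.

```rust
use std::collections::HashMap;
use std::sync::LazyLock;

// Hypothetical lookup table, built once on first access.
// With once_cell this would have been `once_cell::sync::Lazy<...>`.
static BACKENDS: LazyLock<HashMap<&'static str, &'static str>> = LazyLock::new(|| {
    HashMap::from([
        ("mysql", "MySql"),
        ("postgres", "Postgres"),
        ("sqlite", "Sqlite"),
    ])
});

/// Look up a backend name by URL scheme (illustrative only).
pub fn backend_for(scheme: &str) -> Option<&'static str> {
    BACKENDS.get(scheme).copied()
}
```

In a case like this the change amounts to swapping the type name and import, and it removes one third-party dependency.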

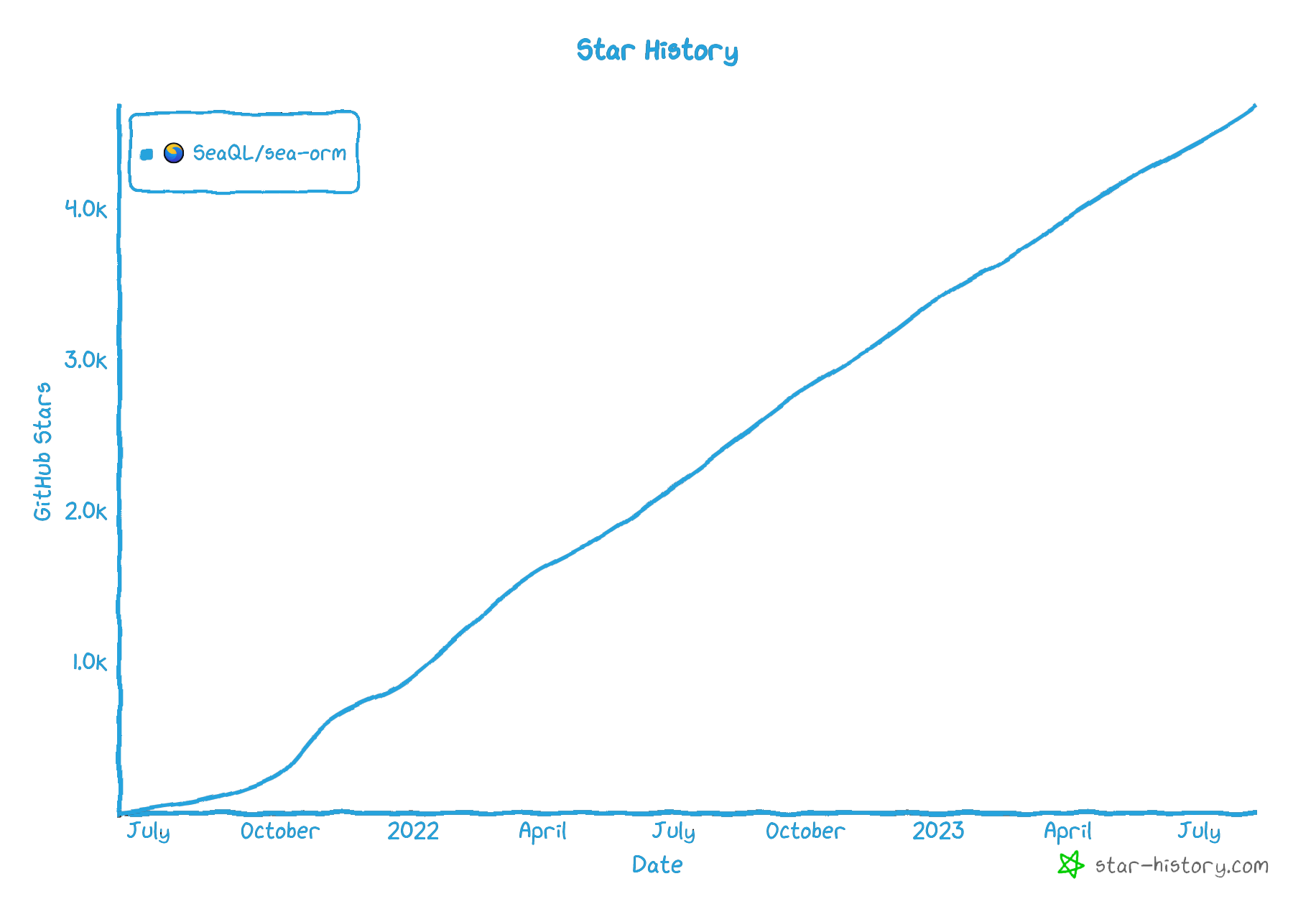

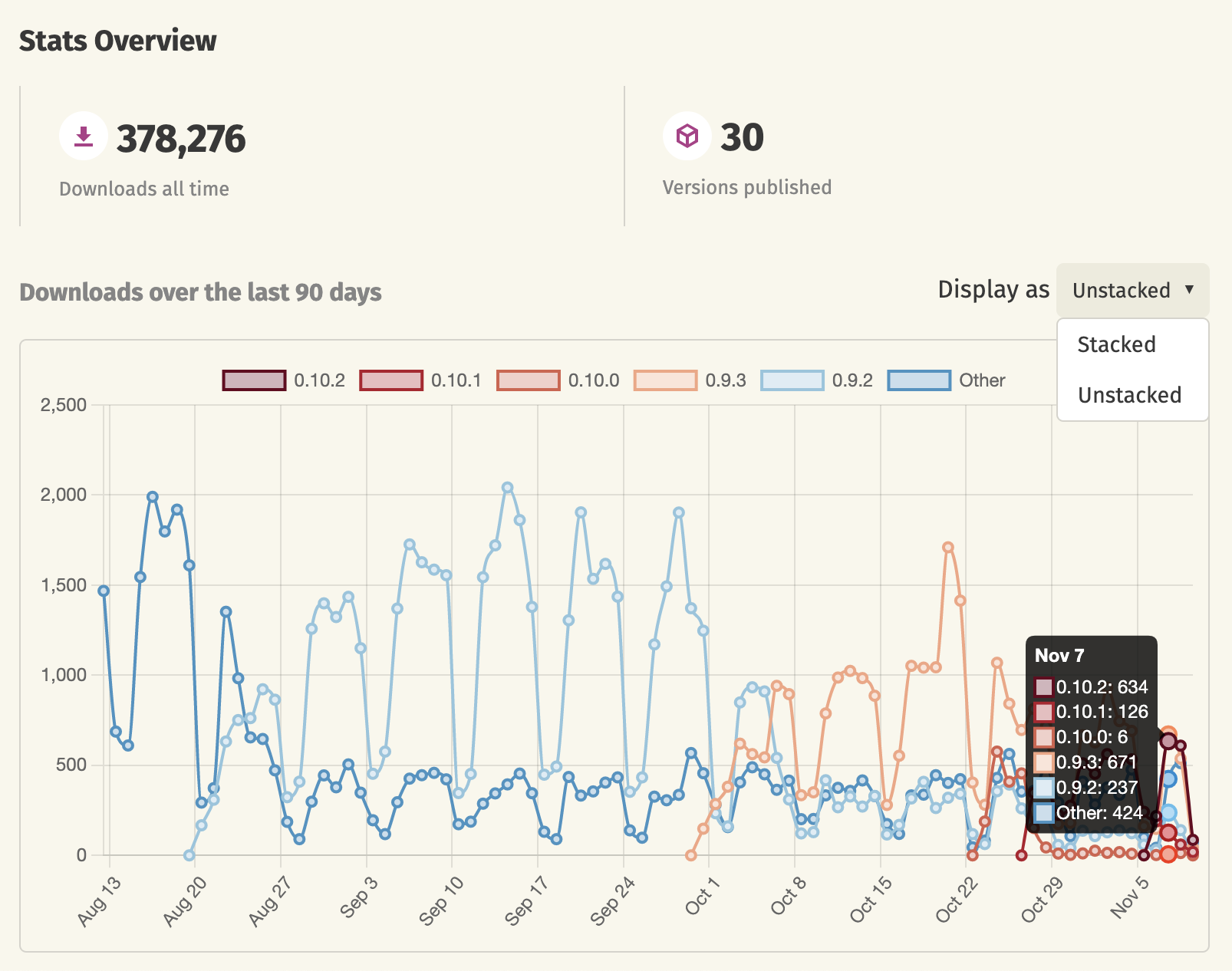

Release Planning

SeaORM 1.0 is a stable release. As demonstrated, we are able to ship many new features without breaking the API. The 1.1 version will be maintained until October 2025, and we'll likely release a 1.2 version with some breaking changes afterwards.

The underlying library SeaQuery will undergo an overhaul and be promoted to 1.0.

If you have suggestions on breaking changes, you are welcome to post them in the discussions:

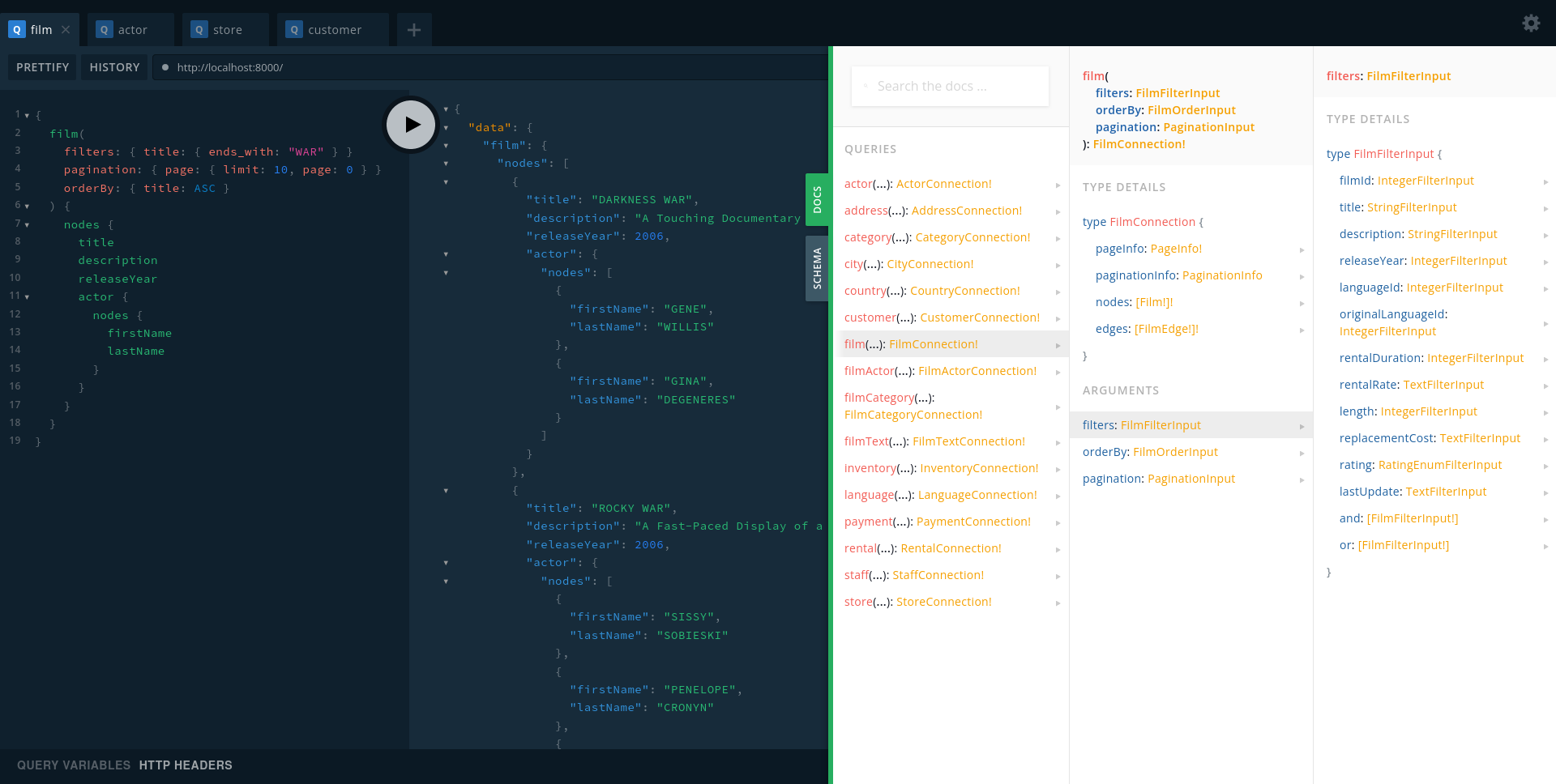

SQL Server Support

We've been beta-testing SQL Server for SeaORM for a while. SeaORM X offers the same SeaORM API for MSSQL. We ported all test cases and most examples, complemented by MSSQL-specific documentation. If you are building enterprise software for your company, you can request commercial access.

Features:

- SeaQuery + SeaSchema

- Entity generation with sea-orm-cli

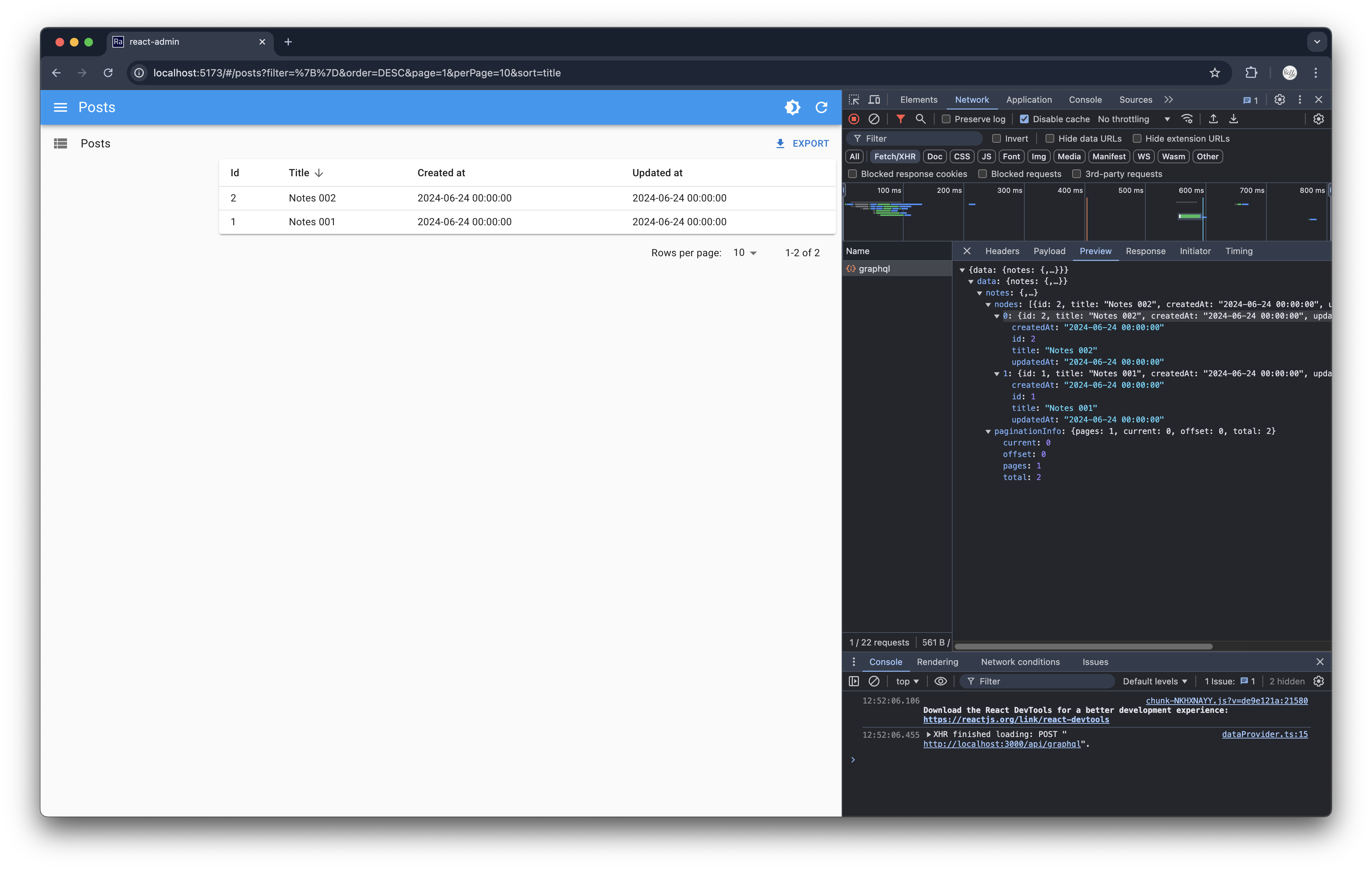

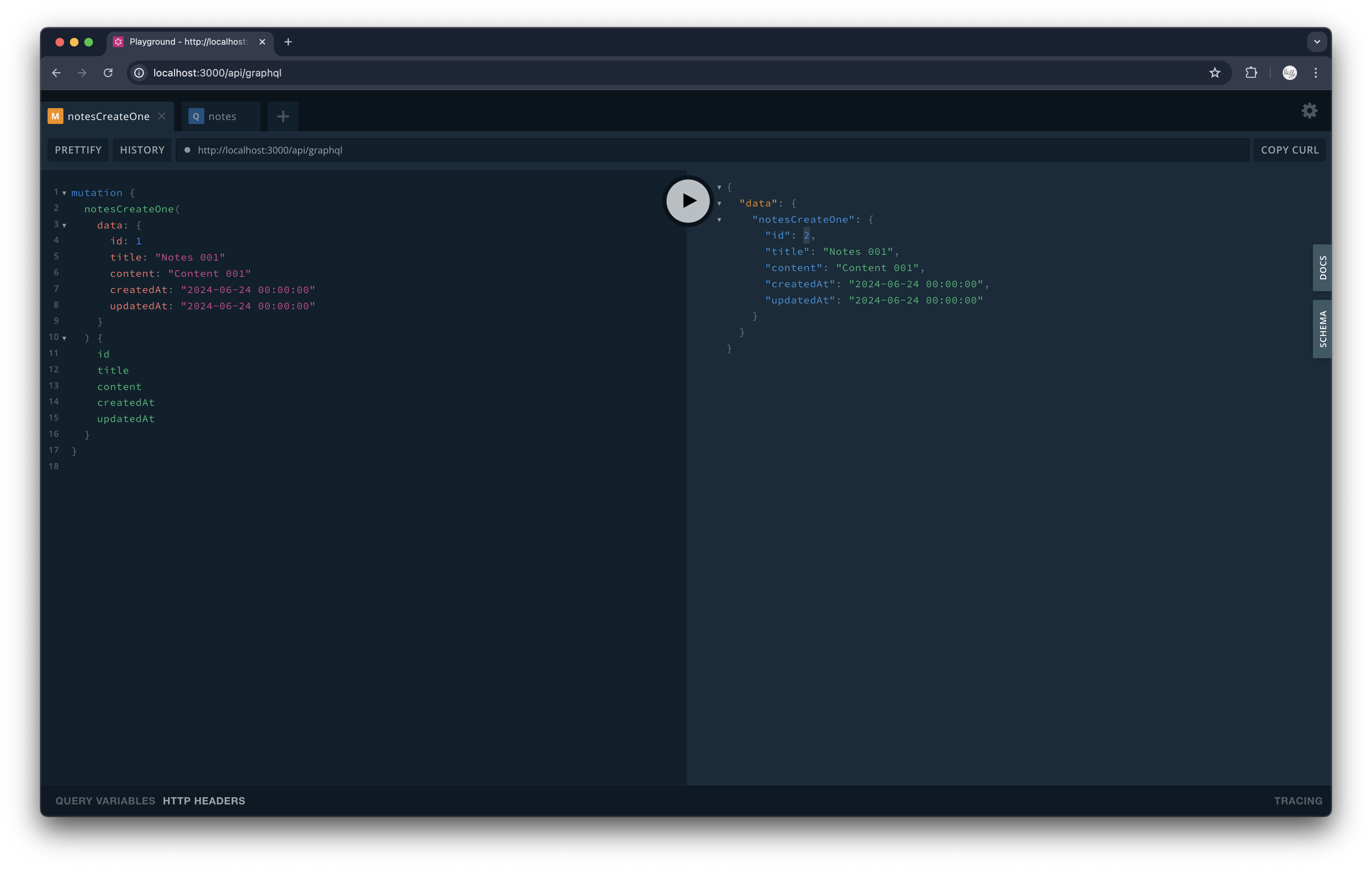

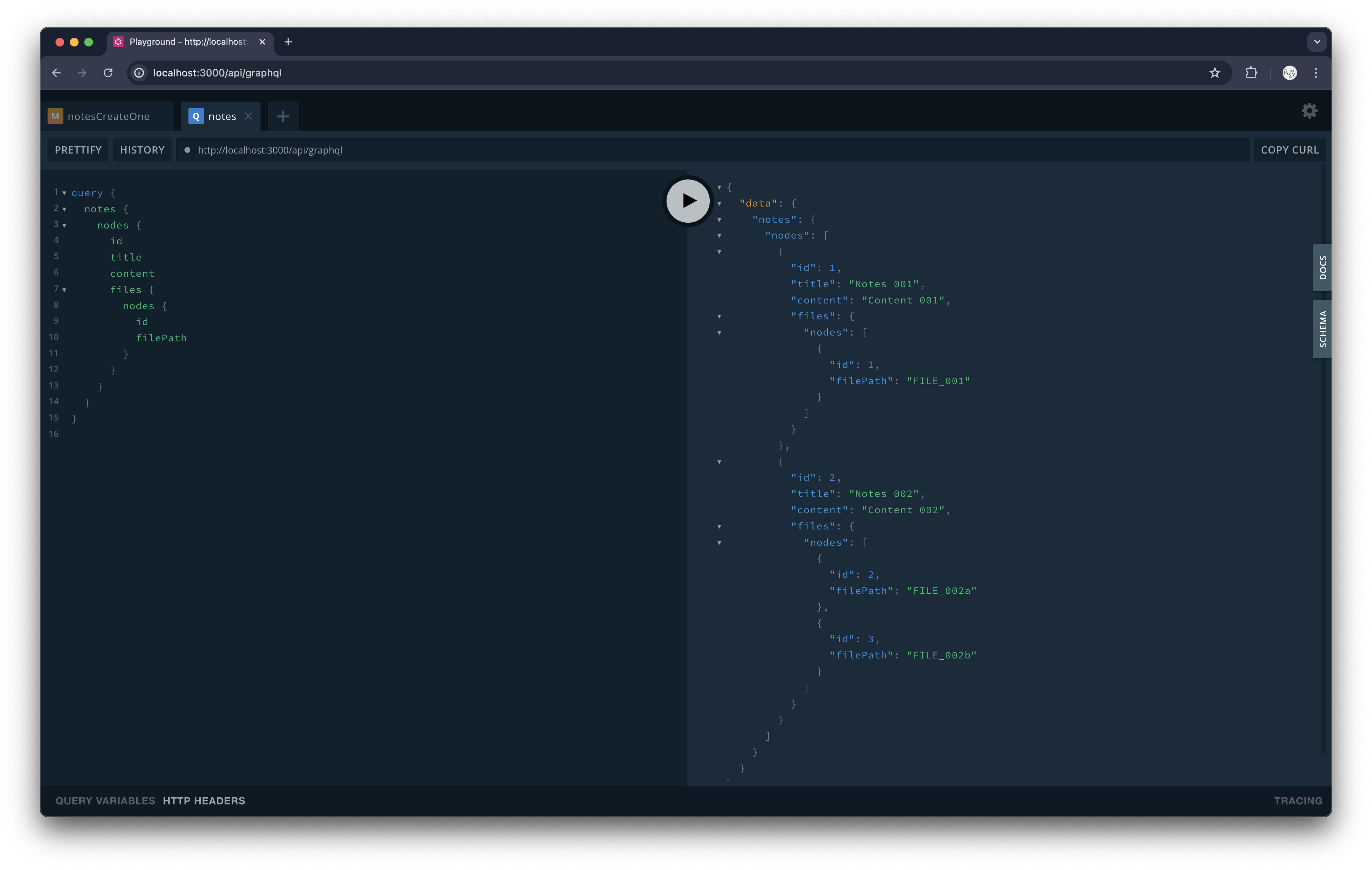

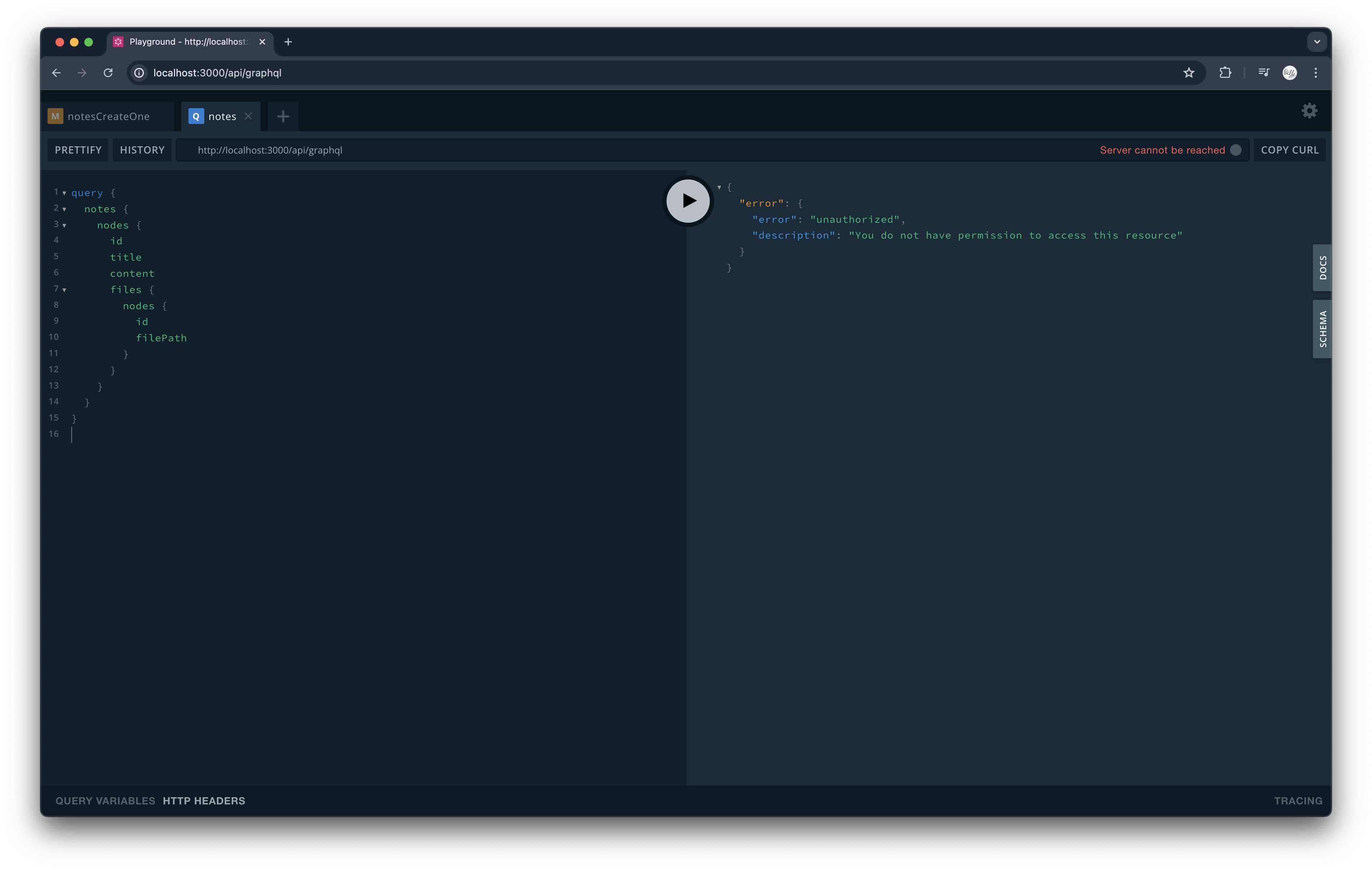

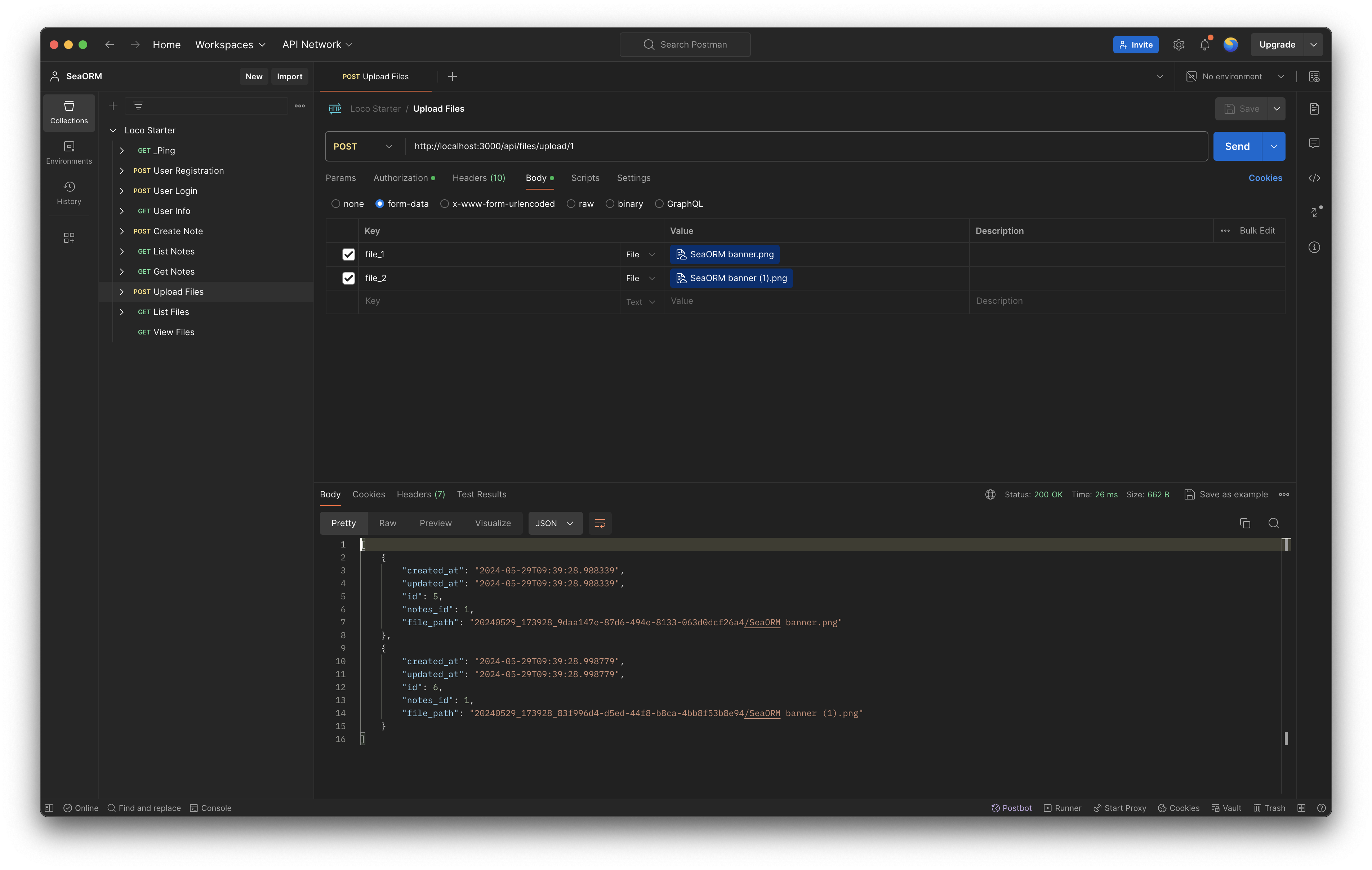

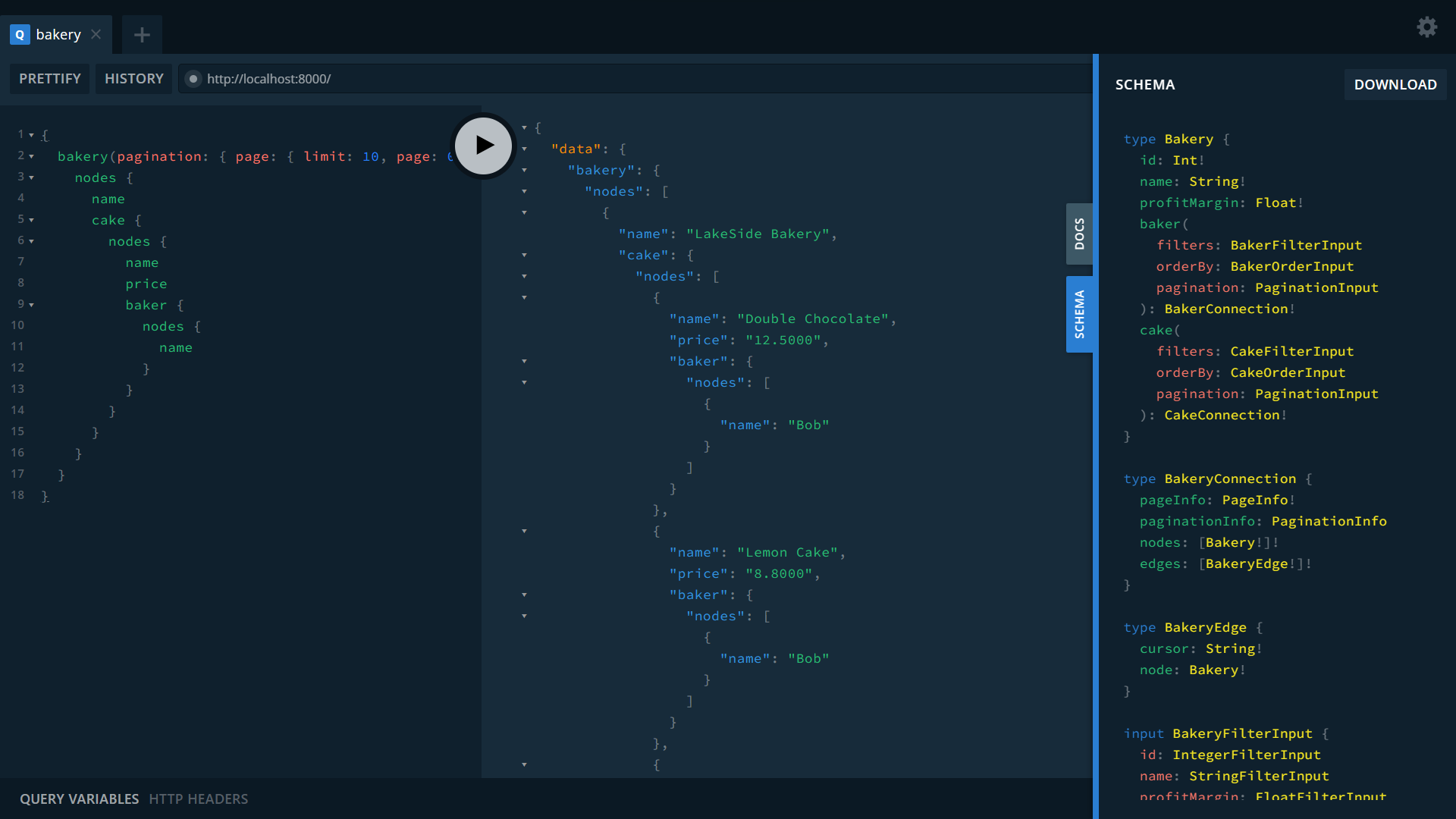

- GraphQL with Seaography

- Nested transaction, connection pooling and multi-async runtime

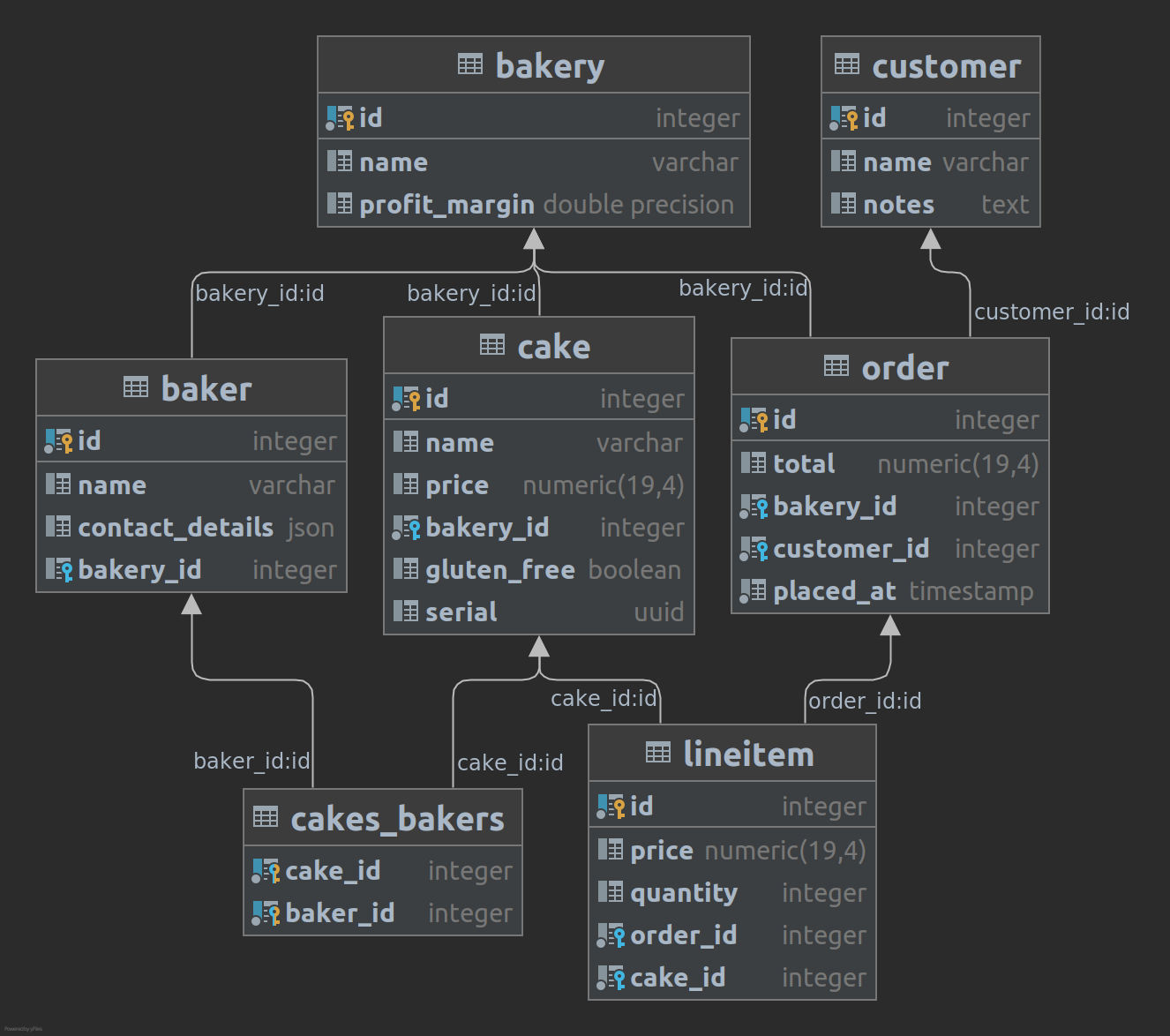

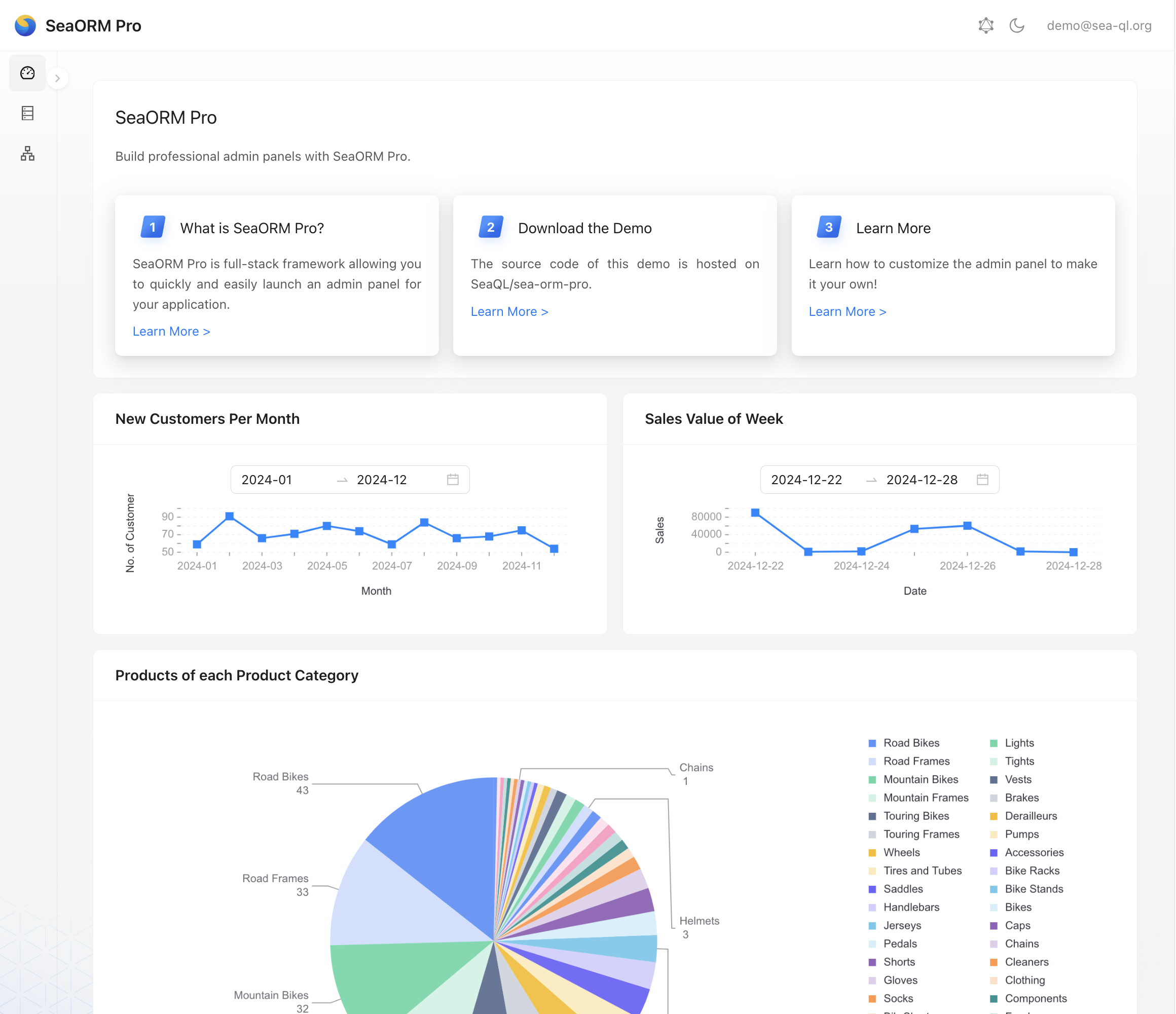

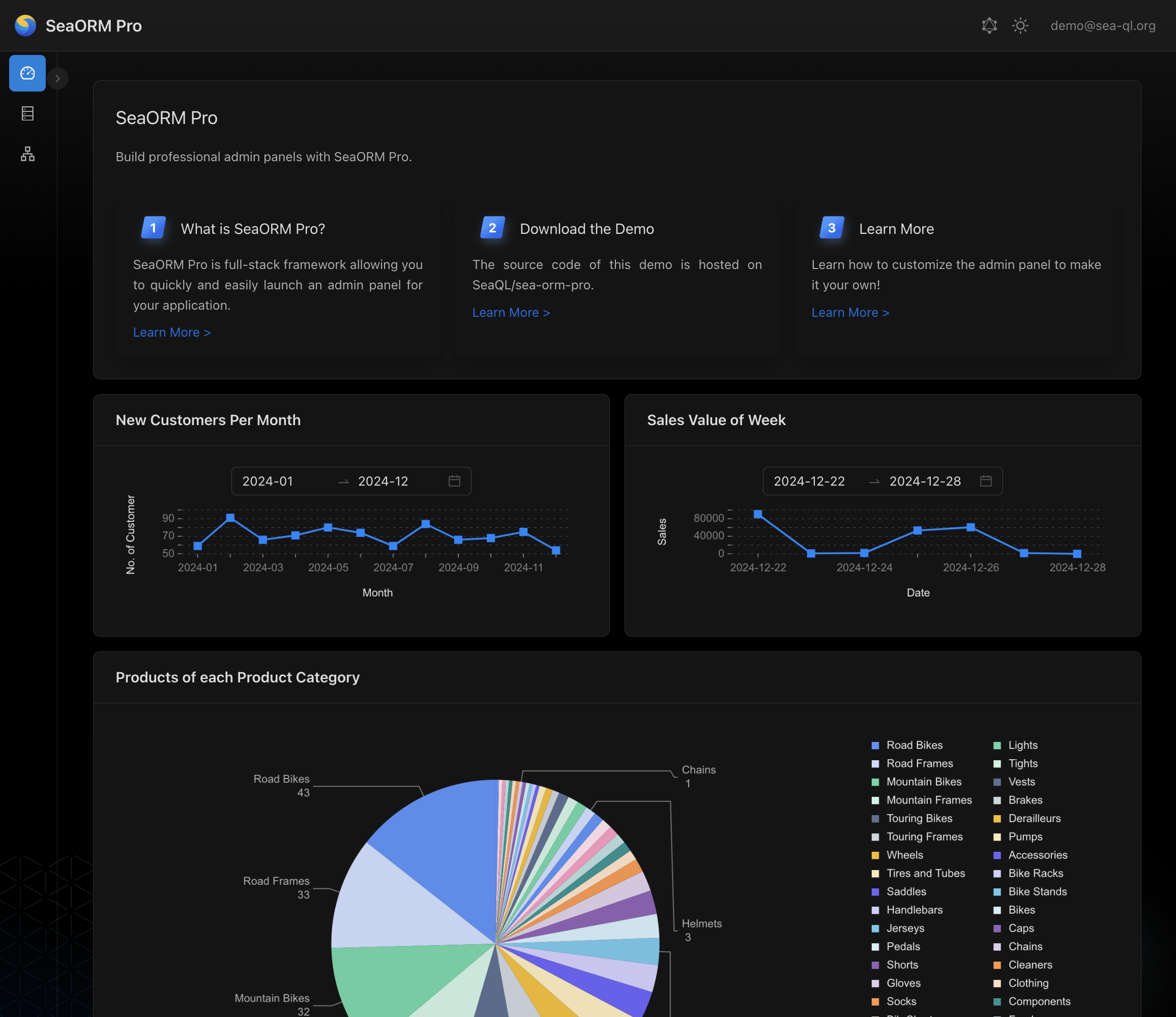

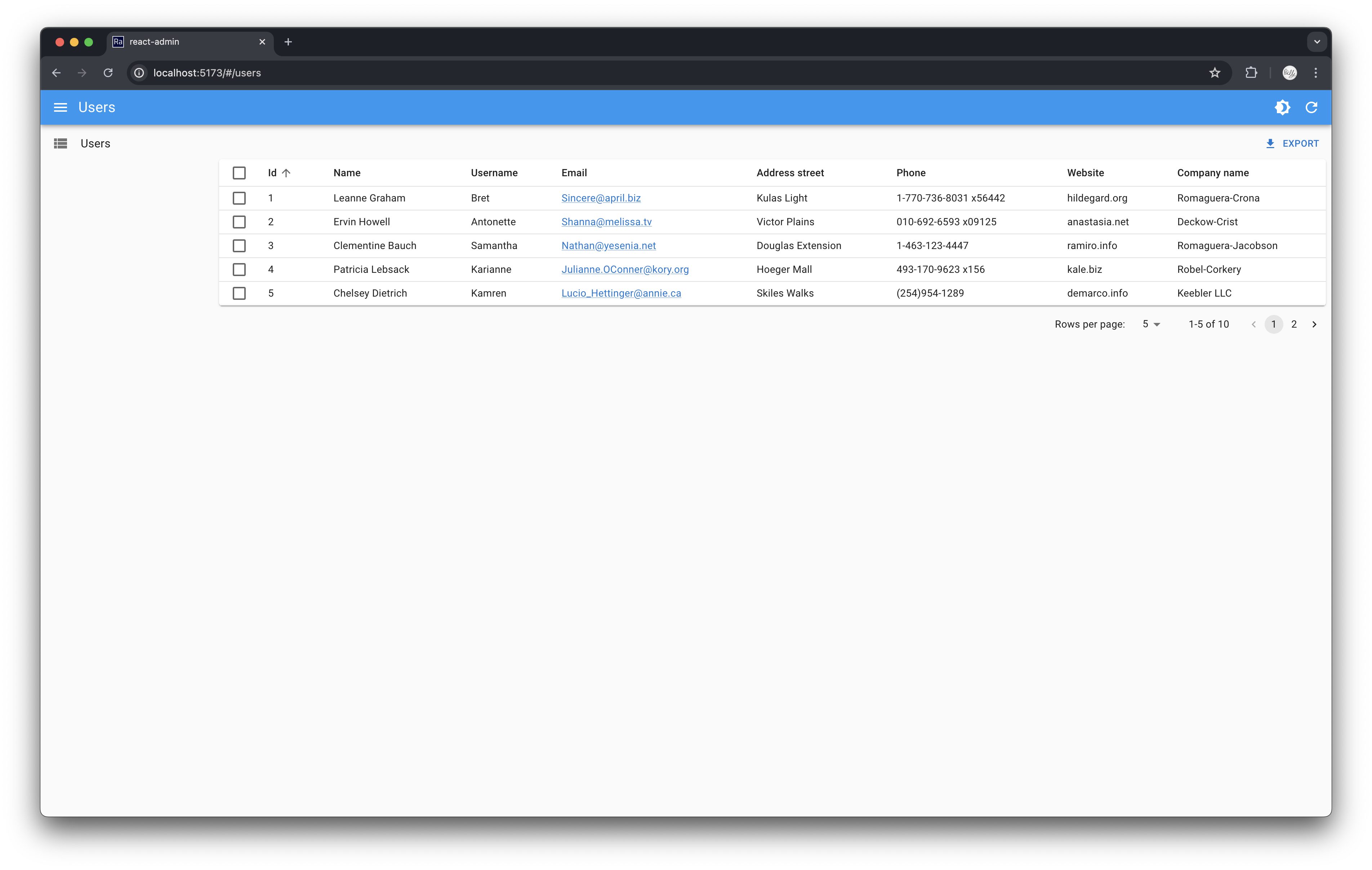

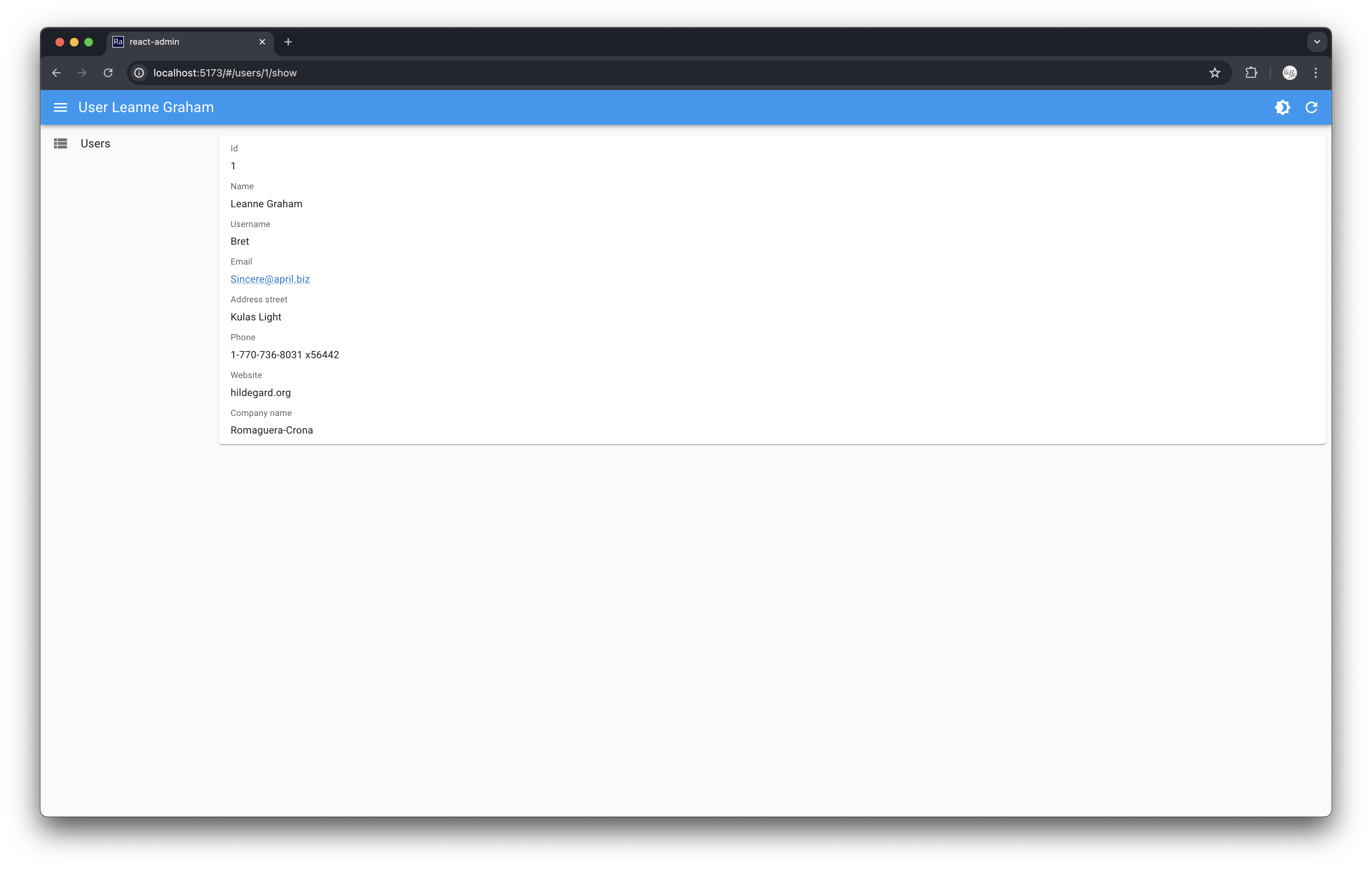

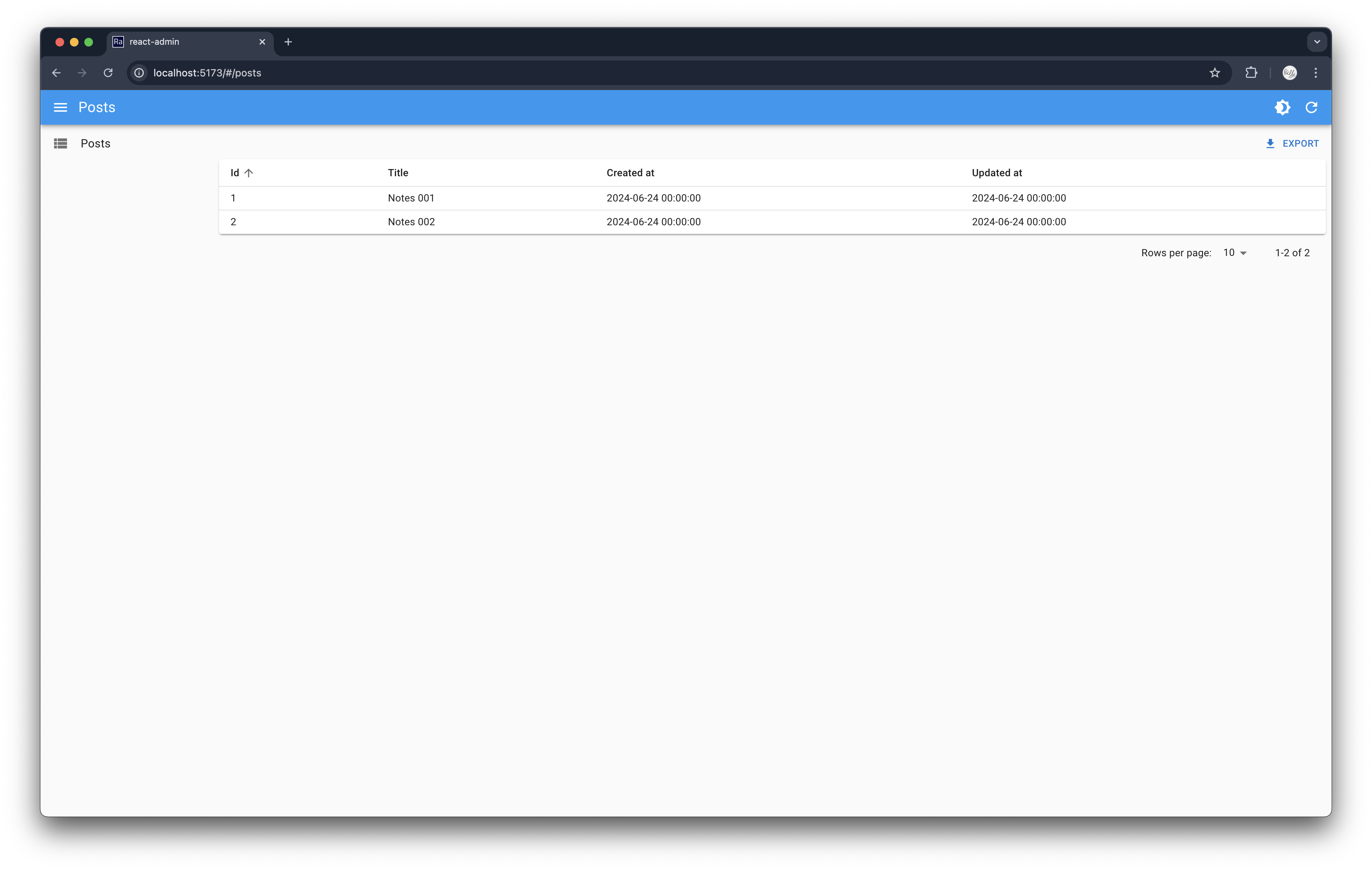

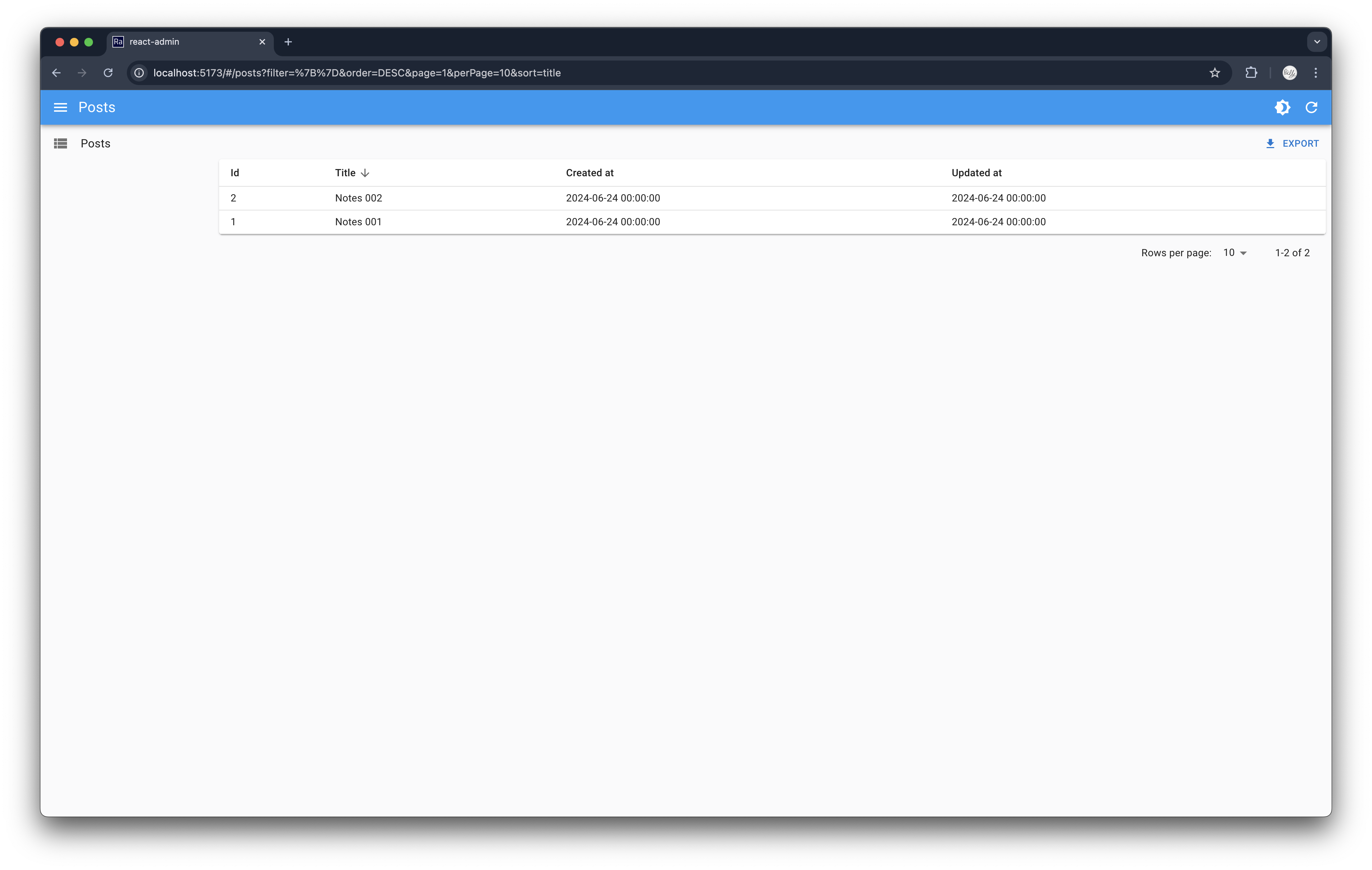

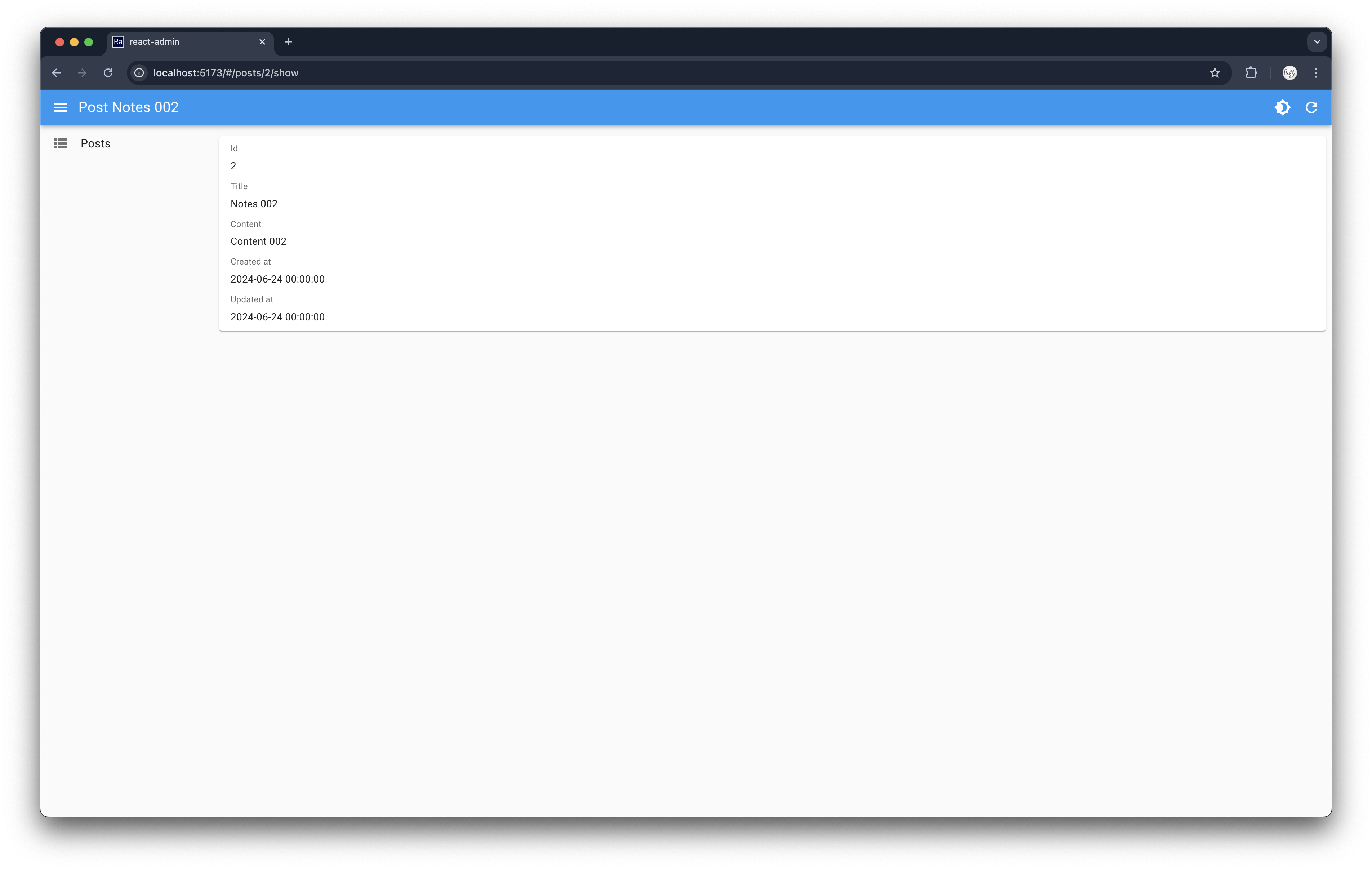

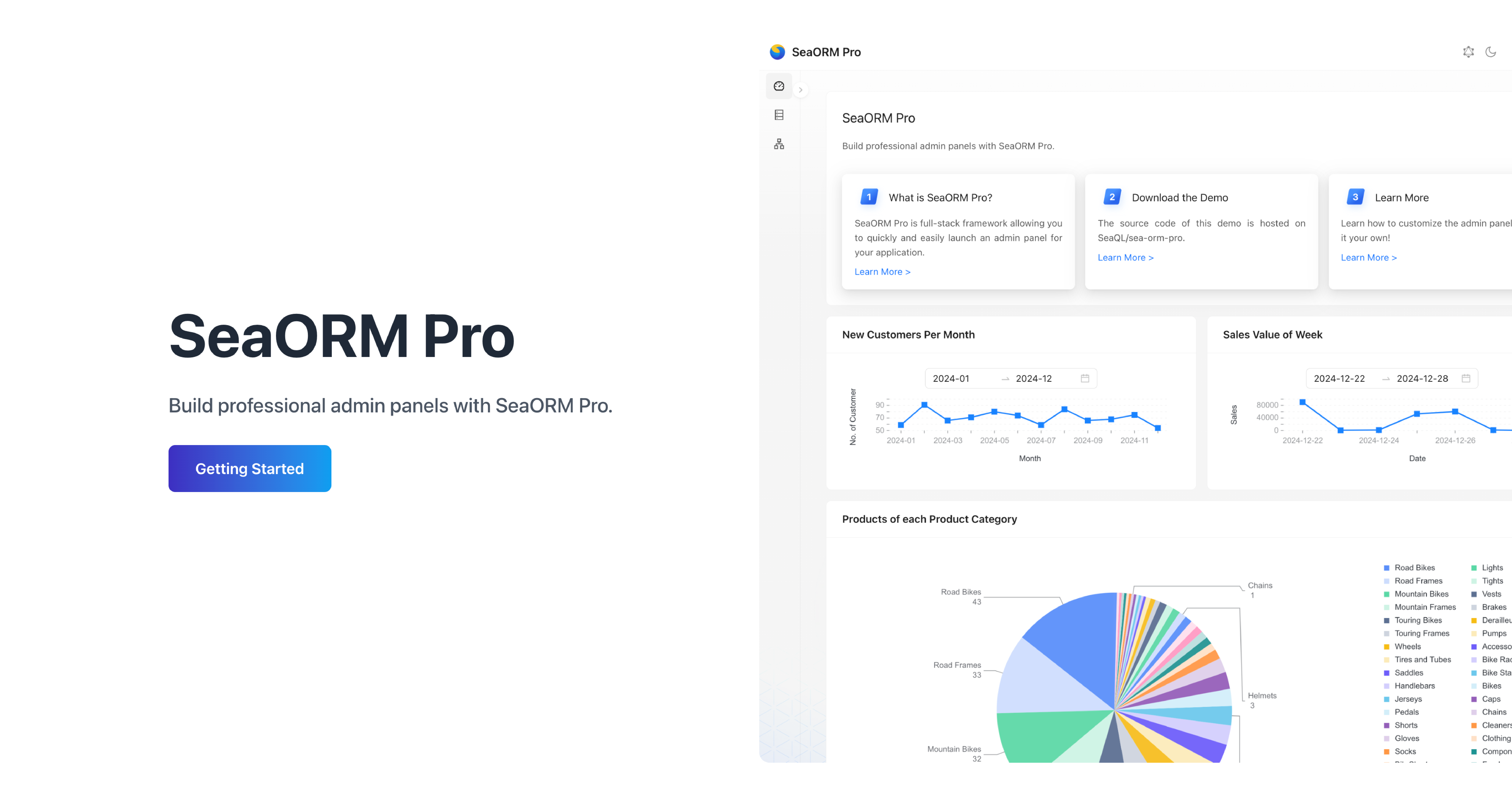

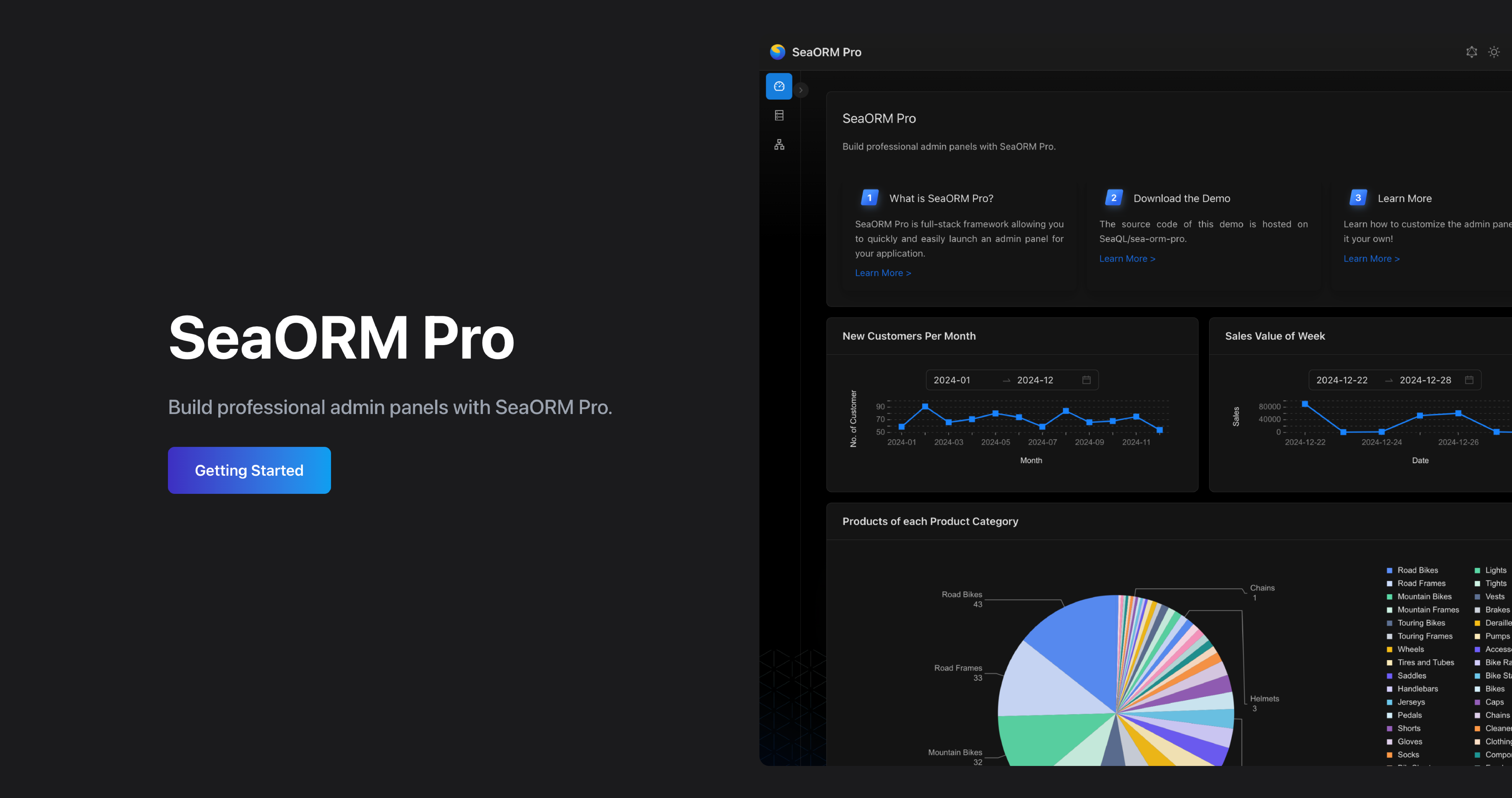

🖥️ SeaORM Pro: Professional Admin Panel

SeaORM Pro is an admin panel solution allowing you to quickly and easily launch an admin panel for your application - frontend development skills not required, but certainly nice to have!

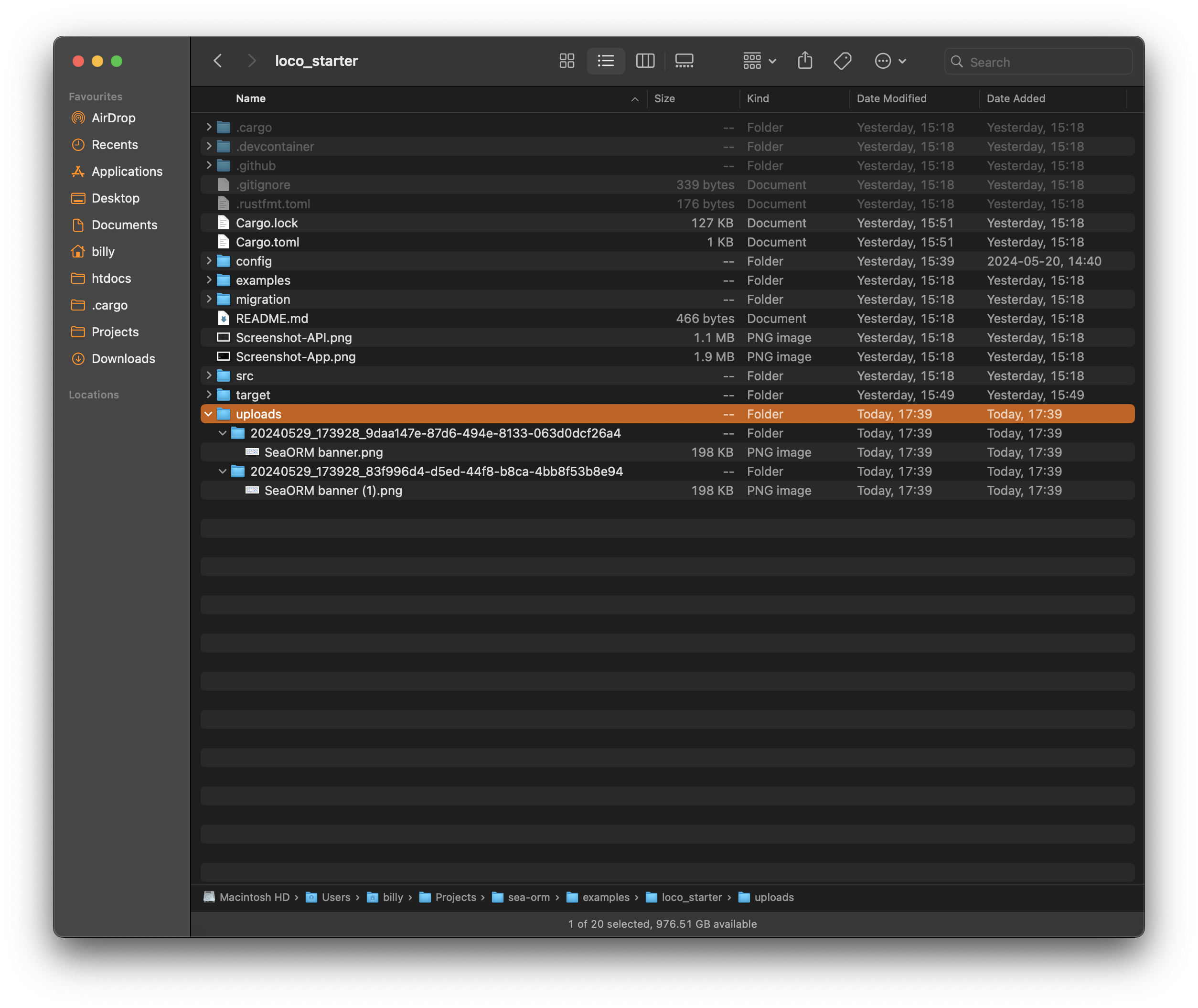

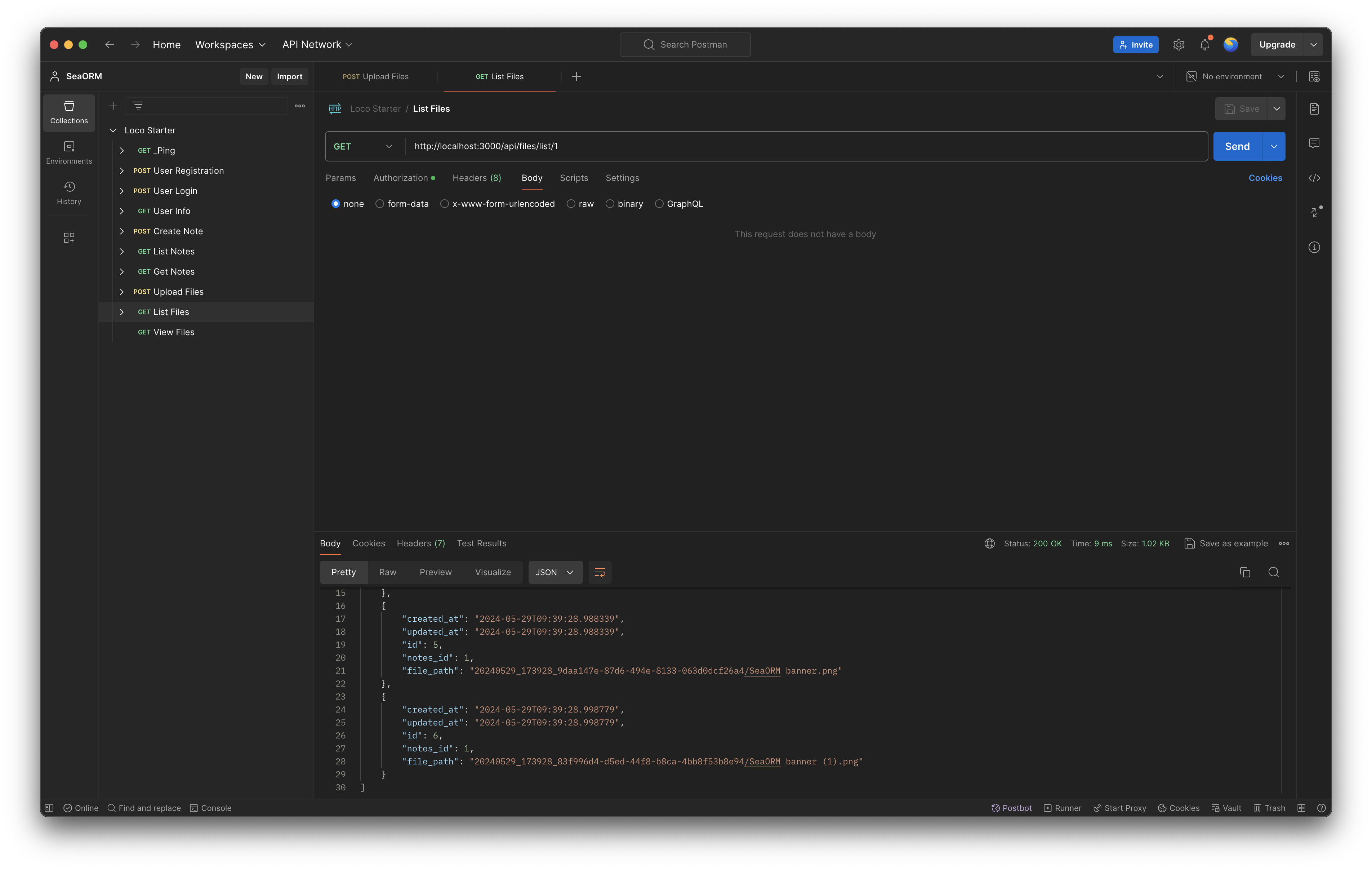

Features:

- Full CRUD

- Built on React + GraphQL

- Built-in GraphQL resolver

- Customize the UI with simple TOML

Getting Started

Latest features

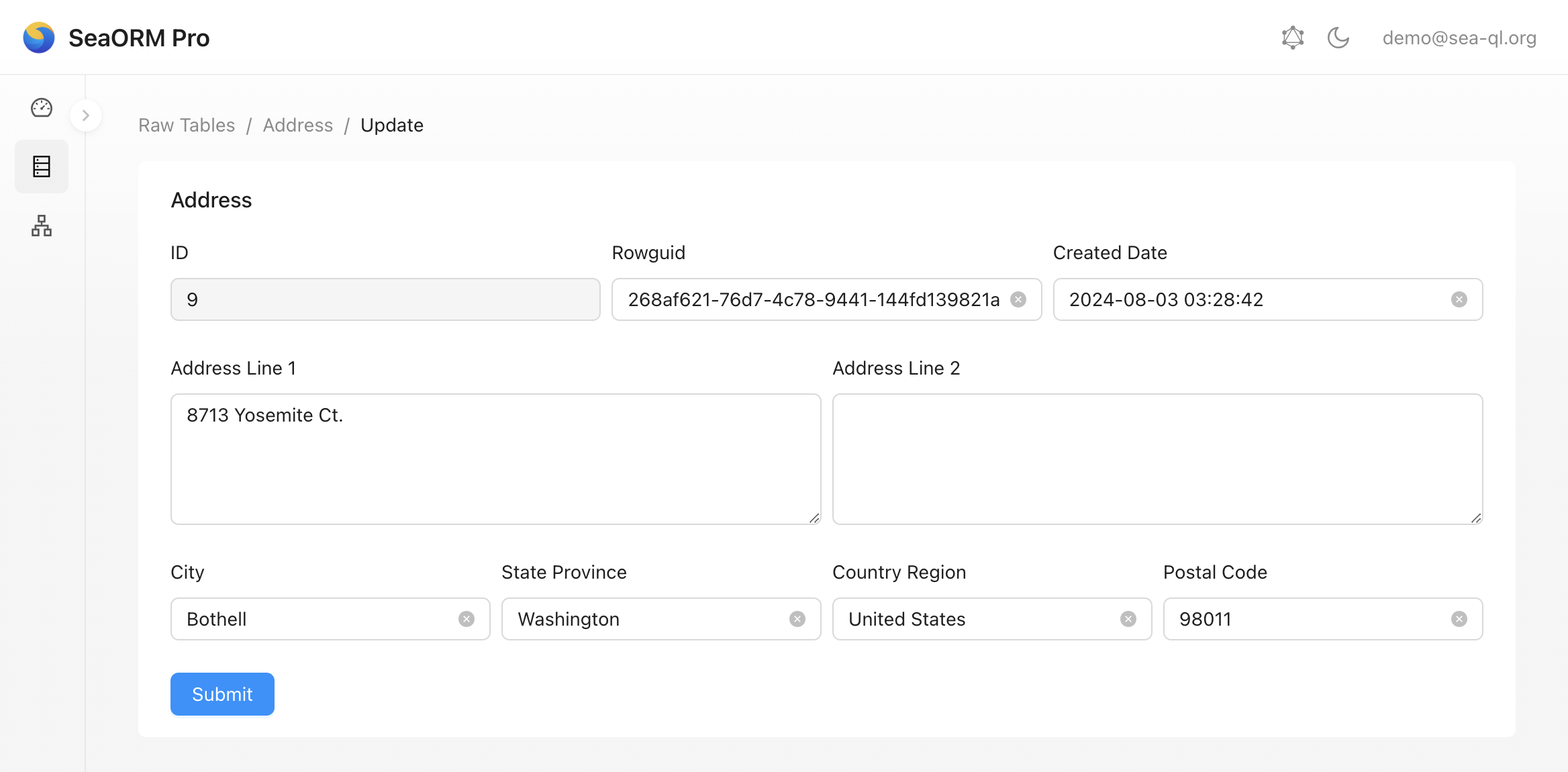

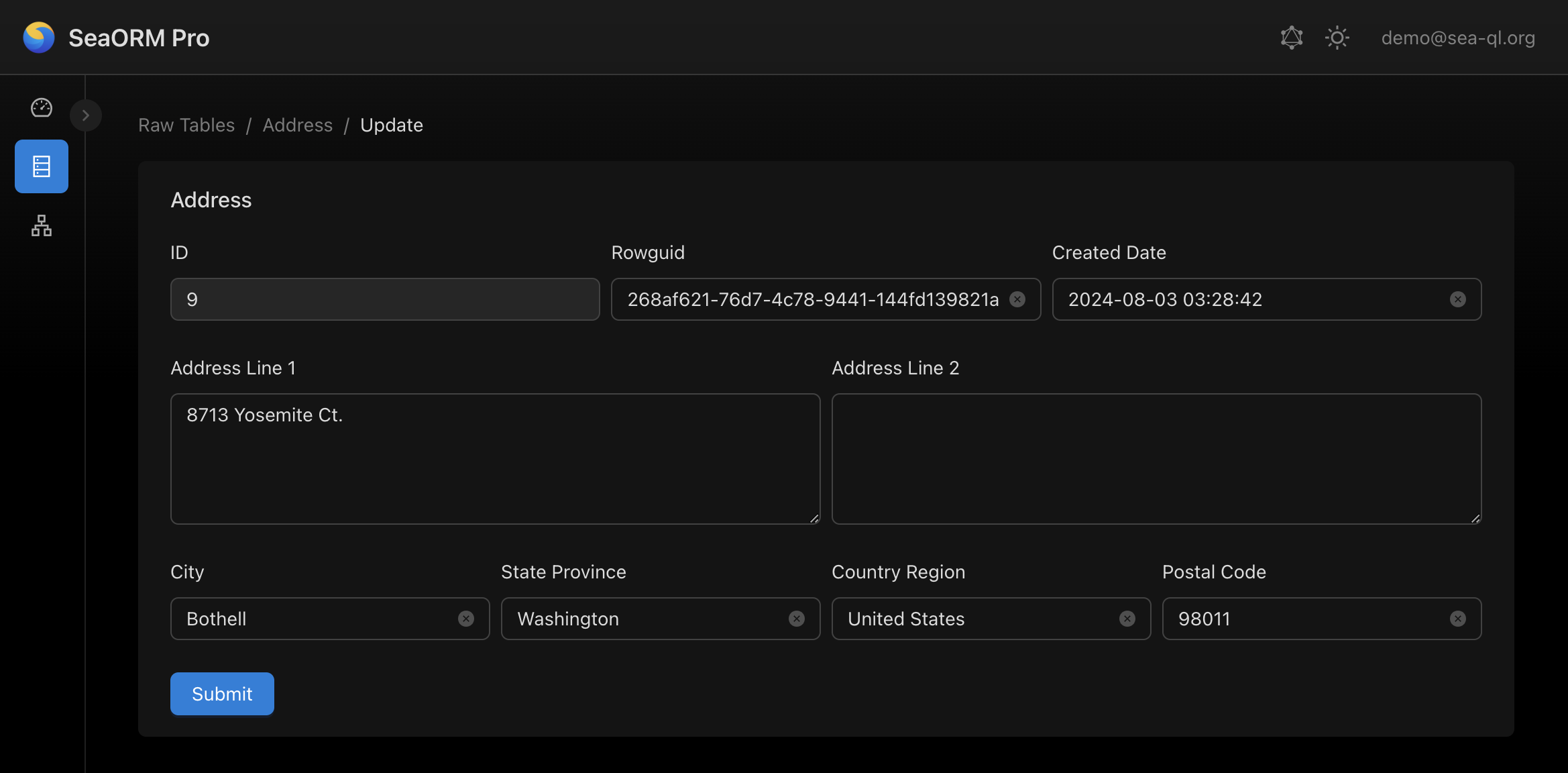

The latest release of SeaORM Pro has a new feature, Model Editor. Instead of updating data in a pop-up dialog, the editor offers a dedicated page with a deep link to view and update data. It also offers more control over the UI layout.

Sponsor

If you feel generous, a small donation will be greatly appreciated, and goes a long way towards sustaining the organization.

A big shout out to our GitHub sponsors 😇: